STOMA FULL SLIDE (probability of IISc bangalore)

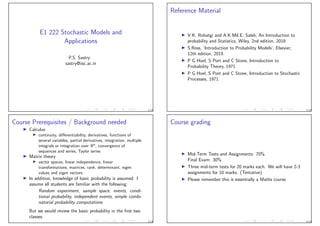

- 1. E1 222 Stochastic Models and Applications. P.S. Sastry, sastry@iisc.ac.in

      Reference Material
      - V.K. Rohatgi and A.K.Md.E. Saleh, An Introduction to Probability and Statistics, Wiley, 2nd edition, 2018.
      - S. Ross, Introduction to Probability Models, Elsevier, 12th edition, 2019.
      - P.G. Hoel, S. Port and C. Stone, Introduction to Probability Theory, 1971.
      - P.G. Hoel, S. Port and C. Stone, Introduction to Stochastic Processes, 1971.

      Course Prerequisites / Background needed
      - Calculus: continuity, differentiability, derivatives, functions of several variables, partial derivatives, integration, multiple integrals or integration over $\Re^n$, convergence of sequences and series, Taylor series.
      - Matrix theory: vector spaces, linear independence, linear transformations, matrices, rank, determinant, eigenvalues and eigenvectors.
      - In addition, knowledge of basic probability is assumed. I assume all students are familiar with the following: random experiment, sample space, events, conditional probability, independent events, simple combinatorial probability computations. But we will review basic probability in the first two classes.

      Course grading
      - Mid-term tests and assignments: 70%. Final exam: 30%.
      - Three mid-term tests for 20 marks each. We will have 2-3 assignments for 10 marks. (Tentative)
      - Please remember this is essentially a Maths course.

- 2. Probability Theory
      - Probability theory is the branch of mathematics that deals with modeling and analysis of random phenomena.
      - Random phenomena are "individually not predictable but have a lot of regularity at a population level".
      - E.g., recommender systems are useful for Amazon or Netflix because customer behaviour can be predicted well at a population level.
      - Example random phenomena: tossing a coin, rolling a die, etc. (familiar to you all).
      - It is also useful in many engineering systems, e.g., for taking care of noise.
      - Probability theory is also needed for Statistics, which deals with making inferences from data.
      - In many engineering problems one needs to deal with random inputs, where probability models are useful:
        - dealing with dynamical systems subjected to noise (e.g., Kalman filter)
        - policies for decision making under uncertainty
        - pattern recognition, prediction from data, ...
      - We may use probability models for analysing algorithms (e.g., average case complexity of algorithms).
      - We may deliberately introduce randomness in an algorithm (e.g., ALOHA protocol, primality testing).

      This is only a 'sample' of possible application scenarios!

      Review of basic probability

      We assume all of you are familiar with the terms: random experiment, outcomes of a random experiment, sample space, events, etc. We use the following notation:
      - Sample space: $\Omega$. Elements of $\Omega$ are the outcomes of the random experiment. We write $\Omega = \{\omega_1, \omega_2, \cdots\}$ when it is countable.
      - An event is, by definition, a subset of $\Omega$.
      - Set of all possible events: $\mathcal{F} \subset 2^{\Omega}$ (power set of $\Omega$). Each event is a subset of $\Omega$.

      Probability axioms

      Probability (or probability measure) is a function that assigns a number to each event and satisfies some properties. Formally, $P : \mathcal{F} \to \Re$ satisfying
      - A1 Non-negativity: $P(A) \geq 0, \ \forall A \in \mathcal{F}$
      - A2 Normalization: $P(\Omega) = 1$
      - A3 $\sigma$-additivity: if $A_1, A_2, \cdots \in \mathcal{F}$ satisfy $A_i \cap A_j = \phi, \ \forall i \neq j$, then
        $$P\left(\cup_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} P(A_i)$$

      Events satisfying $A_i \cap A_j = \phi, \ \forall i \neq j$ are said to be mutually exclusive.

- 3. Probability axioms

      $P : \mathcal{F} \to \Re$, $\mathcal{F} \subset 2^{\Omega}$ (events are subsets of $\Omega$)
      - A1 $P(A) \geq 0, \ \forall A \in \mathcal{F}$
      - A2 $P(\Omega) = 1$
      - A3 If $A_i \cap A_j = \phi, \ \forall i \neq j$ then $P(\cup_{i=1}^{\infty} A_i) = \sum_{i=1}^{\infty} P(A_i)$
      - For these axioms to make sense, we are assuming (i) $\Omega \in \mathcal{F}$ and (ii) $A_1, A_2, \cdots \in \mathcal{F} \Rightarrow (\cup_i A_i) \in \mathcal{F}$. When $\mathcal{F} = 2^{\Omega}$ this is true.

      Simple consequences of the axioms
      - Notation: $A^c$ is the complement of $A$. Writing $C = A + B$ implies $A, B$ are mutually exclusive and $C$ is their union.
      - Let $A \subset B$ be events. Then $B = A + (B - A)$. Now we can show $P(A) \leq P(B)$:
        $$P(B) = P(A + (B - A)) = P(A) + P(B - A) \geq P(A)$$
        This also shows $P(B - A) = P(B) - P(A)$ when $A \subset B$.
      - There are many such properties (I assume familiar to you) that can be derived from the axioms.
      - Here are a few important ones. (Proof is left to you as an exercise!)

      Case of finite $\Omega$: Example
      - Let $\Omega = \{\omega_1, \cdots, \omega_n\}$, $\mathcal{F} = 2^{\Omega}$, and let $P$ be specified through the 'equally likely' assumption.
      - That is, $P(\{\omega_i\}) = \frac{1}{n}$. (Note the notation)
      - Suppose $A = \{\omega_1, \omega_2, \omega_3\}$. Then
        $$P(A) = P(\{\omega_1\} \cup \{\omega_2\} \cup \{\omega_3\}) = \sum_{i=1}^{3} P(\{\omega_i\}) = \frac{3}{n} = \frac{|A|}{|\Omega|}$$
      - We can easily see this to be true for any event $A$.
      - This is the usual familiar formula: number of favourable outcomes divided by total number of outcomes.
      - Thus, 'equally likely' is one way of specifying the probability function (in case of finite $\Omega$).
      - An obvious point worth remembering: specifying $P$ for singleton events fixes it for all other events.

- 4. Review of basic probability

      We use the following notation:
      - Sample space: $\Omega$. Elements of $\Omega$ are the outcomes of the random experiment. We write $\Omega = \{\omega_1, \omega_2, \cdots\}$ when it is countable.
      - An event is, by definition, a subset of $\Omega$.
      - Set of all possible events: $\mathcal{F} \subset 2^{\Omega}$ (power set of $\Omega$). For now, we take $\mathcal{F} = 2^{\Omega}$.

      Probability axioms

      Probability (or probability measure) is a function $P : \mathcal{F} \to \Re$, $\mathcal{F} \subset 2^{\Omega}$, that assigns a number to each event and satisfies
      - A1 $P(A) \geq 0, \ \forall A \in \mathcal{F}$
      - A2 $P(\Omega) = 1$
      - A3 If $A_i \cap A_j = \phi, \ \forall i \neq j$ then $P(\cup_{i=1}^{\infty} A_i) = \sum_{i=1}^{\infty} P(A_i)$

      Some consequences of the axioms
      - $0 \leq P(A) \leq 1$
      - $P(A^c) = 1 - P(A)$
      - If $A \subset B$ then $P(A) \leq P(B)$
      - If $A \subset B$ then $P(B - A) = P(B) - P(A)$
      - $P(A \cup B) = P(A) + P(B) - P(A \cap B)$
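
The listed consequences can be checked exhaustively on a small finite space. The sketch below assumes a fair six-sided die with the 'equally likely' assignment; the die and the names `omega`, `prob`, `all_events` and `check_consequences` are illustrative choices, not part of the slides. A check on one space is of course no substitute for the proofs left as an exercise.

```python
from fractions import Fraction
from itertools import chain, combinations

# Illustrative sample space: outcomes of one roll of a fair die.
omega = frozenset(range(1, 7))

def prob(event):
    """Equally likely assignment: P(A) = |A| / |Omega|."""
    return Fraction(len(event), len(omega))

def all_events(sample_space):
    """Every subset of the sample space, i.e. the power set."""
    items = list(sample_space)
    subsets = chain.from_iterable(
        combinations(items, k) for k in range(len(items) + 1)
    )
    return [frozenset(s) for s in subsets]

events = all_events(omega)

def check_consequences():
    """Assert each listed consequence for every event (or pair of events)."""
    for a in events:
        assert 0 <= prob(a) <= 1
        assert prob(omega - a) == 1 - prob(a)
        for b in events:
            assert prob(a | b) == prob(a) + prob(b) - prob(a & b)
            if a <= b:  # a is a subset of b
                assert prob(a) <= prob(b)
                assert prob(b - a) == prob(b) - prob(a)
    return True
```

Exact rational arithmetic (`Fraction`) is used so that the equalities are tested exactly rather than up to floating-point error.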

- 5. Case of countably infinite $\Omega$
      - Let $\Omega = \{\omega_1, \omega_2, \cdots\}$.
      - Once again, any $A \subset \Omega$ can be written as a mutually exclusive union of singleton sets.
      - Let $q_i, \ i = 1, 2, \cdots$ be numbers such that $q_i \geq 0$ and $\sum_i q_i = 1$.
      - We can now set $P(\{\omega_i\}) = q_i, \ i = 1, 2, \cdots$. (The assumptions on $q_i$ are needed to satisfy $P(A) \geq 0$ and $P(\Omega) = 1$.)
      - This fixes $P$ for all events: $P(A) = \sum_{\omega \in A} P(\{\omega\})$.
      - This is how we normally define a probability measure on countably infinite $\Omega$.
      - This can be done for finite $\Omega$ too.

      Example: countably infinite $\Omega$
      - Consider a random experiment of tossing a biased coin repeatedly till we get a head. We take the outcome of the experiment to be the number of tails we had before the first head.
      - Here we have $\Omega = \{0, 1, 2, \cdots\}$.
      - A (reasonable) probability assignment is
        $$P(\{k\}) = (1 - p)^k p, \quad k = 0, 1, \cdots$$
        where $p$ is the probability of head and $0 < p < 1$. (We assume you understand the idea of 'independent' tosses here.)
      - In the notation of the previous slide, $q_i = (1 - p)^i p$.
      - It is easy to see that $q_i \geq 0$ and $\sum_{i=0}^{\infty} q_i = 1$.

      Case of uncountably infinite $\Omega$
      - We will mostly be considering only the cases where $\Omega$ is a subset of $\Re^d$ for some $d$.
      - Note that now an event need not be a countable union of singleton sets.
      - For now we will only consider a simple intuitive extension of the 'equally likely' idea.
      - Suppose $\Omega$ is a finite interval of $\Re$. Then we will take $P(A) = \frac{m(A)}{m(\Omega)}$, where $m(A)$ is the length of the set $A$.
      - We can use this in higher dimensions also by taking $m(\cdot)$ to be an appropriate 'measure' of a set.
      - For example, in $\Re^2$, $m(A)$ denotes the area of $A$, in $\Re^3$ it would be volume, and so on. (There are many issues that need more attention here.)

      Example: uncountably infinite $\Omega$

      Problem: A rod of unit length is broken at two random points. What is the probability that the three pieces so formed would make a triangle?
      - Let us take the left end of the rod as the origin and let $x, y$ denote the two successive points where the rod is broken.
      - Then the random experiment is picking two numbers $x, y$ with $0 < x < y < 1$.
      - We can take $\Omega = \{(x, y) : 0 < x < y < 1\} \subset \Re^2$.
      - For the pieces to make a triangle, the sum of the lengths of any two should be more than the third.

- 6. (Rod problem, continued)
      - The lengths are $x$, $(y - x)$, $(1 - y)$. So we need
        $$x + (y - x) > (1 - y) \Rightarrow y > 0.5$$
        $$x + (1 - y) > (y - x) \Rightarrow y < x + 0.5$$
        $$(y - x) + (1 - y) > x \Rightarrow x < 0.5$$
      - So we have $\Omega = \{(x, y) : 0 < x < y < 1\}$ and the event of interest is $A = \{(x, y) : y > 0.5; \ x < 0.5; \ y < x + 0.5\}$.
      - We can visualize it in the $(x, y)$ plane: $\Omega$ is the triangle above the diagonal $y = x$ inside the unit square (area $1/2$), and $A$ is the triangle with vertices $(0, 0.5)$, $(0.5, 0.5)$, $(0.5, 1)$ (area $1/8$).
      - The required probability is the area of $A$ divided by the area of $\Omega$, which gives the answer as $0.25$.

      Probability space
      - Everything we do in probability theory is always in reference to an underlying probability space $(\Omega, \mathcal{F}, P)$ where
        - $\Omega$ is the sample space
        - $\mathcal{F} \subset 2^{\Omega}$ is the set of events; each event is a subset of $\Omega$
        - $P : \mathcal{F} \to [0, 1]$ is a probability (measure) that assigns a number between 0 and 1 to every event (satisfying the three axioms).

      Conditional Probability
      - Let $B$ be an event with $P(B) > 0$. We define the conditional probability, conditioned on $B$, of any event $A$ as
        $$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(AB)}{P(B)}$$
      - The above is a notation. "$A \mid B$" does not represent any set operation! (This is an abuse of notation!)
      - Given a $B$, conditional probability is a new probability assignment to any event.
      - That is, given $B$ with $P(B) > 0$, we define a new probability $P_B : \mathcal{F} \to [0, 1]$ by
        $$P_B(A) = \frac{P(AB)}{P(B)}$$
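
The answer 0.25 for the broken-rod problem can be sanity-checked by simulation. This is only a rough Monte Carlo sketch: it assumes the two break points are drawn independently and uniformly on $(0, 1)$ and then sorted, which gives a uniform point in $\Omega = \{0 < x < y < 1\}$; the function names, trial count and seed are arbitrary illustrative choices.

```python
import random

def forms_triangle(x, y):
    """True if pieces of length x, y - x, 1 - y satisfy all triangle inequalities."""
    a, b, c = x, y - x, 1 - y
    return a + b > c and a + c > b and b + c > a

def estimate_triangle_probability(num_trials=200_000, seed=0):
    """Fraction of random breaks whose three pieces form a triangle."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_trials):
        u, v = rng.random(), rng.random()
        x, y = min(u, v), max(u, v)  # successive break points, x <= y
        hits += forms_triangle(x, y)
    return hits / num_trials
```

With a couple of hundred thousand trials the estimate should land close to 0.25; the exact value comes from the area argument, not from the simulation.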

- 7. Conditional probability is a probability
      - What does this mean? The new function we defined, $P_B : \mathcal{F} \to [0, 1]$, $P_B(A) = \frac{P(AB)}{P(B)}$, satisfies the three axioms of probability.
      - $P_B(A) \geq 0$ and $P_B(\Omega) = 1$.
      - If $A_1, A_2$ are mutually exclusive then $A_1 B$ and $A_2 B$ are also mutually exclusive and hence
        $$P_B(A_1 + A_2) = \frac{P((A_1 + A_2)B)}{P(B)} = \frac{P(A_1 B + A_2 B)}{P(B)} = \frac{P(A_1 B) + P(A_2 B)}{P(B)} = P_B(A_1) + P_B(A_2)$$
      - Once we understand that conditional probability is a new probability assignment, we go back to the 'standard notation'
        $$P(A \mid B) = \frac{P(AB)}{P(B)}$$
      - Note $P(B \mid B) = 1$ and $P(A \mid B) > 0$ only if $P(AB) > 0$.
      - Now the 'new' probability of each event is determined by what it has in common with $B$.
      - If we know the event $B$ has occurred, then based on this knowledge we can readjust probabilities of all events, and that is given by the conditional probability.
      - Intuitively it is as if the sample space is now reduced to $B$ because we are given the information that $B$ has occurred.
      - This is a useful intuition as long as we understand it properly.
      - It is not as if we talk about conditional probability only for subsets of $B$. Conditional probability is also with respect to the original probability space. Every element of $\mathcal{F}$ has conditional probability defined.

      Conditional probability and causation
      - Suppose $P(A \mid B) > P(A)$. Does it mean "$B$ causes $A$"? With $P(A), P(B) > 0$,
        $$P(A \mid B) > P(A) \Rightarrow P(AB) > P(A)P(B) \Rightarrow \frac{P(AB)}{P(A)} > P(B) \Rightarrow P(B \mid A) > P(B)$$
      - The relation is symmetric in $A$ and $B$. Hence, conditional probabilities cannot actually capture causal influences.
      - There are probabilistic methods to capture causation (but far beyond the scope of this course!)

      Conditioning on multiple events
      - In a conditional probability, the conditioning event can be any event (with positive probability).
      - In particular, it could be an intersection of events.
      - We think of that as conditioning on multiple events:
        $$P(A \mid B, C) = P(A \mid BC) = \frac{P(ABC)}{P(BC)}$$

- 8. Chain rule
      - The conditional probability is defined by $P(A \mid B) = \frac{P(AB)}{P(B)}$.
      - This gives us a useful identity: $P(AB) = P(A \mid B)P(B)$.
      - We can iterate this for multiple events:
        $$P(ABC) = P(A \mid BC)P(BC) = P(A \mid BC)P(B \mid C)P(C)$$

      Partitions
      - Let $B_1, \cdots, B_m$ be events such that $\cup_{i=1}^{m} B_i = \Omega$ and $B_i B_j = \phi, \ \forall i \neq j$.
      - Such a collection of events is said to be a partition of $\Omega$. (They are also sometimes said to be mutually exclusive and collectively exhaustive.)
      - Given this partition, any other event can be represented as a mutually exclusive union: $A = AB_1 + \cdots + AB_m$. To explain the notation again,
        $$A = A \cap \Omega = A \cap (B_1 \cup \cdots \cup B_m) = (A \cap B_1) \cup \cdots \cup (A \cap B_m)$$
        Hence, $A = AB_1 + \cdots + AB_m$.

      Total Probability rule
      - Let $B_1, \cdots, B_m$ be a partition of $\Omega$.
      - Then, for any event $A$, we have
        $$P(A) = P(AB_1 + \cdots + AB_m) = P(AB_1) + \cdots + P(AB_m) = P(A \mid B_1)P(B_1) + \cdots + P(A \mid B_m)P(B_m)$$
      - The formula (where the $B_i$ form a partition)
        $$P(A) = \sum_i P(A \mid B_i)P(B_i)$$
        is known as the total probability rule, total probability law, or total probability formula.
      - This is very useful in many situations ("arguing by cases").

      Example: Polya's Urn

      An urn contains $r$ red balls and $b$ black balls. We draw a ball at random, note its color, and put back that ball along with $c$ balls of the same color. We keep repeating this process. Let $R_n$ ($B_n$) denote the event of drawing a red (black) ball at the $n$th draw. We want to calculate the probabilities of all these events.
      - It is easy to see that $P(R_1) = \frac{r}{r+b}$ and $P(B_1) = \frac{b}{r+b}$.
      - For $R_2$ we have, using the total probability rule,
        $$P(R_2) = P(R_2 \mid R_1)P(R_1) + P(R_2 \mid B_1)P(B_1) = \frac{r + c}{r + c + b} \cdot \frac{r}{r + b} + \frac{r}{r + b + c} \cdot \frac{b}{r + b} = \frac{r(r + c + b)}{(r + c + b)(r + b)} = \frac{r}{r + b} = P(R_1)$$
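
The same "condition on the first draw" argument can be applied repeatedly to get $P(R_n)$ for larger $n$. The sketch below is one way to do that with exact fractions; `prob_red_at_draw` is a hypothetical helper name, and the recursion relies on the observation that after the first draw the experiment restarts with an updated urn, so draw $n$ of the original process is draw $n - 1$ of the restarted one.

```python
from fractions import Fraction

def prob_red_at_draw(n, r, b, c):
    """P(R_n) for Polya's urn with r red, b black and c balls added per draw.

    Uses the total probability rule over the partition {R_1, B_1}.
    """
    p_red_first = Fraction(r, r + b)
    if n == 1:
        return p_red_first
    # Given R_1 the urn holds r + c red and b black; given B_1, r red and b + c black.
    return (p_red_first * prob_red_at_draw(n - 1, r + c, b, c)
            + (1 - p_red_first) * prob_red_at_draw(n - 1, r, b + c, c))
```

For instance, with the arbitrary choice $r = 3$, $b = 5$, $c = 2$, the function returns $3/8$ for the first several values of $n$, in line with $P(R_2) = P(R_1)$ derived above. The recursion makes $2^{n-1}$ calls, so it is only meant for small $n$.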

- 9. (Polya's urn, continued)
      - Similarly we can show that $P(B_2) = P(B_1)$.
      - One can show by mathematical induction that $P(R_n) = P(R_1)$ and $P(B_n) = P(B_1)$ for all $n$. (Left as an exercise for you!)
      - This does not depend on the value of $c$!

      Bayes Rule
      - Another important formula based on conditional probability is Bayes rule:
        $$P(A \mid B) = \frac{P(AB)}{P(B)} = \frac{P(B \mid A)P(A)}{P(B)}$$
      - This allows one to calculate $P(A \mid B)$ if we know $P(B \mid A)$.
      - It is useful in many applications because one conditional probability may be easier to obtain (or estimate) than the other.
      - Often one uses the total probability rule to calculate the denominator in the RHS above:
        $$P(A \mid B) = \frac{P(B \mid A)P(A)}{P(B \mid A)P(A) + P(B \mid A^c)P(A^c)}$$

      Example: Bayes Rule

      Let $D$ and $D^c$ denote someone being diagnosed as having a disease or not having it. Let $T_+$ and $T_-$ denote the events of a test for it being positive or negative. (Note that $T_+^c = T_-$.) We want to calculate $P(D \mid T_+)$.
      - We have, by Bayes rule,
        $$P(D \mid T_+) = \frac{P(T_+ \mid D)P(D)}{P(T_+ \mid D)P(D) + P(T_+ \mid D^c)P(D^c)}$$
      - The probabilities $P(T_+ \mid D)$ and $P(T_+ \mid D^c)$ can be obtained through, for example, laboratory experiments.
      - $P(T_+ \mid D)$ is called the true positive rate and $P(T_+ \mid D^c)$ is called the false positive rate.
      - We also need $P(D)$, the probability of a random person having the disease.
      - Let us take some specific numbers. Let $P(D) = 0.5$, $P(T_+ \mid D) = 0.99$, $P(T_+ \mid D^c) = 0.05$. Then
        $$P(D \mid T_+) = \frac{0.99 \times 0.5}{0.99 \times 0.5 + 0.05 \times 0.5} \approx 0.95$$
        That is pretty good.
      - But taking $P(D) = 0.5$ is not realistic. Let us take $P(D) = 0.1$:
        $$P(D \mid T_+) = \frac{0.99 \times 0.1}{0.99 \times 0.1 + 0.05 \times 0.9} \approx 0.69$$
      - Now suppose we can improve the test so that $P(T_+ \mid D^c) = 0.01$:
        $$P(D \mid T_+) = \frac{0.99 \times 0.1}{0.99 \times 0.1 + 0.01 \times 0.9} \approx 0.92$$
      - These different cases are important in understanding the role of the false positive rate.
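
The three numerical cases above are all instances of one formula, which can be wrapped in a small function. This is a minimal sketch; the function name `posterior` and its parameter names are illustrative choices, not notation from the slides.

```python
def posterior(prior, true_positive_rate, false_positive_rate):
    """P(D | T+) by Bayes rule, with the denominator from the total probability rule."""
    numerator = true_positive_rate * prior
    evidence = numerator + false_positive_rate * (1 - prior)
    return numerator / evidence

# The three cases discussed in the example (values before rounding):
case_equal_prior = posterior(0.5, 0.99, 0.05)    # about 0.952
case_low_prior = posterior(0.1, 0.99, 0.05)      # about 0.6875
case_better_test = posterior(0.1, 0.99, 0.01)    # about 0.917
```

Varying `prior` and `false_positive_rate` in this function is a quick way to see how strongly the posterior depends on each of them.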

- 10. Prior and posterior probabilities
      - $P(D)$ is the probability that a random person has the disease. We call it the prior probability.
      - $P(D \mid T_+)$ is the probability of the random person having the disease once we do a test and it comes out positive. We call it the posterior probability.
      - Bayes rule essentially transforms the prior probability to the posterior probability.
      - In many applications of Bayes rule the same generic situation exists: based on a measurement we want to predict (what may be called) the state of nature.
      - For another example, take a simple communication system.
        - $D$ can represent the event that the transmitter sent bit 1.
        - $T_+$ can represent an event about the measurement we made at the receiver.
        - We want the probability that bit 1 is sent based on the measurement.
        - The knowledge we need is $P(T_+ \mid D)$ and $P(T_+ \mid D^c)$, which can be determined through experiment or modelling of the channel.

      Beyond the binary case
      $$P(D \mid T_+) = \frac{P(T_+ \mid D)P(D)}{P(T_+ \mid D)P(D) + P(T_+ \mid D^c)P(D^c)}$$
      - Not all applications of Bayes rule involve a 'binary' situation.
      - Suppose $D_1, D_2, D_3$ are the (exclusive) possibilities and $T$ is an event about a measurement. Then
        $$P(D_1 \mid T) = \frac{P(T \mid D_1)P(D_1)}{P(T)} = \frac{P(T \mid D_1)P(D_1)}{P(T \mid D_1)P(D_1) + P(T \mid D_2)P(D_2) + P(T \mid D_3)P(D_3)} = \frac{P(T \mid D_1)P(D_1)}{\sum_i P(T \mid D_i)P(D_i)}$$
      - In the binary situation we can think of Bayes rule in a slightly modified form too:
        $$\frac{P(D \mid T_+)}{P(D^c \mid T_+)} = \frac{P(T_+ \mid D)}{P(T_+ \mid D^c)} \cdot \frac{P(D)}{P(D^c)}$$
      - This is called the odds-likelihood form of Bayes rule. (The ratio of $P(A)$ to $P(A^c)$ is called the odds for $A$.)

- 11. Independent Events
      - Two events $A, B$ are said to be independent if $P(AB) = P(A)P(B)$.
      - Note that this is a definition. Two events are independent if and only if they satisfy the above.
      - Suppose $P(A), P(B) > 0$. Then, if they are independent,
        $$P(A \mid B) = \frac{P(AB)}{P(B)} = P(A); \quad \text{similarly } P(B \mid A) = P(B)$$
      - This gives an intuitive feel for independence.
      - Independence is an important (often confusing!) concept.

      Example: Independence

      A class has 20 female and 30 male course (MTech) students and 6 female and 9 male research (PhD) students. Are gender and degree independent?
      - Let $F, M, C, R$ denote the events of female, male, course, research students.
      - From the given numbers, we can easily calculate the following:
        $$P(F) = \frac{26}{65} = \frac{2}{5}; \quad P(C) = \frac{50}{65} = \frac{10}{13}; \quad P(FC) = \frac{20}{65} = \frac{4}{13}$$
      - Hence we can verify $P(F)P(C) = \frac{2}{5} \cdot \frac{10}{13} = \frac{4}{13} = P(FC)$ and conclude that $F$ and $C$ are independent. Similarly we can show it for the others.
      - In this example, if we keep all other numbers the same but change the number of male research students to, say, 12, then the independence no longer holds ($\frac{26}{68} \cdot \frac{50}{68} \neq \frac{20}{68}$).
      - One needs to be careful about independence!
      - We always have an underlying probability space $(\Omega, \mathcal{F}, P)$.
      - Once that is given, the probabilities of all events are fixed.
      - Hence whether or not two events are independent is a matter of 'calculation'.

      Independence and complements
      - If $A$ and $B$ are independent then so are $A$ and $B^c$.
      - Using $A = AB + AB^c$, we have
        $$P(AB^c) = P(A) - P(AB) = P(A)(1 - P(B)) = P(A)P(B^c)$$
      - This also shows that $A^c$ and $B$ are independent and so are $A^c$ and $B^c$.
      - For example, in the previous problem, once we saw that $F$ and $C$ are independent, we can conclude $M$ and $C$ are also independent (because in this example we are taking $F^c = M$).
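
Since independence here is 'a matter of calculation', the class example can be checked mechanically. The sketch below assumes a student is picked uniformly at random from the class; the dictionary layout and the name `gender_degree_independent` are illustrative.

```python
from fractions import Fraction

def gender_degree_independent(counts):
    """Check P(F)P(C) == P(FC), where counts maps (gender, degree) to a head count."""
    total = sum(counts.values())
    p_f = Fraction(sum(v for (g, _), v in counts.items() if g == "F"), total)
    p_c = Fraction(sum(v for (_, d), v in counts.items() if d == "C"), total)
    p_fc = Fraction(counts[("F", "C")], total)
    return p_f * p_c == p_fc

# Numbers from the example: C = course (MTech), R = research (PhD).
original_class = {("F", "C"): 20, ("M", "C"): 30, ("F", "R"): 6, ("M", "R"): 9}
# Same class with the number of male research students changed to 12.
modified_class = {**original_class, ("M", "R"): 12}
```

Here `gender_degree_independent(original_class)` holds while `gender_degree_independent(modified_class)` does not, matching the two cases in the slides.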

- 12. Independence in repeated coin tosses
      - Consider the random experiment of tossing two fair coins (or tossing a coin twice).
      - $\Omega = \{HH, HT, TH, TT\}$. Suppose we employ the 'equally likely' idea.
      - That is, $P(\{HH\}) = \frac{1}{4}$, $P(\{HT\}) = \frac{1}{4}$ and so on.
      - Let $A$ = 'H on 1st toss' $= \{HH, HT\}$ ($P(A) = \frac{1}{2}$). Let $B$ = 'T on second toss' $= \{HT, TT\}$ ($P(B) = \frac{1}{2}$).
      - We have $P(AB) = P(\{HT\}) = 0.25$.
      - Since $P(A)P(B) = \frac{1}{2} \cdot \frac{1}{2} = \frac{1}{4} = P(AB)$, $A$ and $B$ are independent.
      - Hence, in multiple tosses, assuming all outcomes are equally likely implies that the outcome of one toss is independent of another.
      - In multiple tosses, assuming all outcomes are equally likely is alright if the coin is fair.
      - Suppose we toss a biased coin two times.
      - Then the four outcomes are, obviously, not 'equally likely'.
      - How should we then assign these probabilities?
      - If we assume tosses are independent then we can assign probabilities easily.
      - Consider a toss of a biased coin: $\Omega^1 = \{H, T\}$, $P(\{H\}) = p$ and $P(\{T\}) = 1 - p$.
      - If we toss this twice then $\Omega^2 = \{HH, HT, TH, TT\}$ and we assign
        $$P(\{HH\}) = p^2, \quad P(\{HT\}) = p(1 - p), \quad P(\{TH\}) = (1 - p)p, \quad P(\{TT\}) = (1 - p)^2$$
      - $P(\{HH, HT\}) = p^2 + p(1 - p) = p$.
      - This assignment ensures that $P(\{HH\})$ equals the product of the probability of H on the 1st toss and H on the second toss.
      - Essentially, $\Omega^2$ is a cartesian product of $\Omega^1$ with itself, and we used products of the corresponding probabilities.
      - For any independent repetitions of a random experiment we follow this. (We will look at it more formally when we consider multiple random variables.)

      Assuming independence
      - In many situations calculating probabilities of intersections of events is difficult.
      - One often assumes $A$ and $B$ are independent to calculate $P(AB)$.
      - As we saw, if $A$ and $B$ are independent, then $P(A \mid B) = P(A)$.
      - This is often used, at an intuitive level, to justify the assumption of independence.
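
The product assignment for a biased coin extends to any number of tosses. The following sketch builds it for $n$ tosses and lets one evaluate events; `product_measure` and `prob` are hypothetical helper names, and $p = 1/3$ is an arbitrary value chosen only for illustration.

```python
from fractions import Fraction
from itertools import product

def product_measure(p, n):
    """Assign each length-n sequence of H/T the product of per-toss probabilities."""
    measure = {}
    for outcome in product("HT", repeat=n):
        weight = Fraction(1)
        for toss in outcome:
            weight *= p if toss == "H" else 1 - p
        measure[outcome] = weight
    return measure

def prob(measure, event):
    """P(event), where event is a predicate on outcomes."""
    return sum((w for o, w in measure.items() if event(o)), Fraction(0))

p = Fraction(1, 3)               # illustrative bias
two_tosses = product_measure(p, 2)
p_head_first = prob(two_tosses, lambda o: o[0] == "H")
p_tail_second = prob(two_tosses, lambda o: o[1] == "T")
p_both = prob(two_tosses, lambda o: o[0] == "H" and o[1] == "T")
```

Under this assignment `p_head_first` equals `p` and `p_both` equals `p_head_first * p_tail_second`, so 'H on 1st toss' and 'T on second toss' come out independent by construction.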

- 13. Independence of multiple events
      - Events $A_1, A_2, \cdots, A_n$ are said to be (totally) independent if for any $k$, $1 \leq k \leq n$, and any distinct indices $i_1, \cdots, i_k$, we have
        $$P(A_{i_1} \cdots A_{i_k}) = P(A_{i_1}) \cdots P(A_{i_k})$$
      - For example, $A, B, C$ are independent if
        $$P(AB) = P(A)P(B); \quad P(AC) = P(A)P(C); \quad P(BC) = P(B)P(C); \quad P(ABC) = P(A)P(B)P(C)$$

      Pair-wise independence
      - Events $A_1, A_2, \cdots, A_n$ are said to be pair-wise independent if
        $$P(A_i A_j) = P(A_i)P(A_j), \ \forall i \neq j$$
      - Events may be pair-wise independent but not (totally) independent.
      - Example: Four balls in a box inscribed with '1', '2', '3' and '123'. Let $E_i$ be the event that number '$i$' appears on a randomly drawn ball, $i = 1, 2, 3$.
      - Easy to see: $P(E_i) = 0.5, \ i = 1, 2, 3$.
      - $P(E_i E_j) = 0.25 \ (i \neq j) \Rightarrow$ pairwise independent.
      - But $P(E_1 E_2 E_3) = 0.25 \neq (0.5)^3$.

      Recap
      - Everything we do in probability theory is always in reference to an underlying probability space $(\Omega, \mathcal{F}, P)$ where
        - $\Omega$ is the sample space
        - $\mathcal{F} \subset 2^{\Omega}$ is the set of events; each event is a subset of $\Omega$
        - $P : \mathcal{F} \to [0, 1]$ is a probability (measure) that satisfies the three axioms:
          - A1 $P(A) \geq 0, \ \forall A \in \mathcal{F}$
          - A2 $P(\Omega) = 1$
          - A3 If $A_i \cap A_j = \phi, \ \forall i \neq j$ then $P(\cup_{i=1}^{\infty} A_i) = \sum_{i=1}^{\infty} P(A_i)$

      PS Sastry, IISc, Bangalore, 2020

      Recap
      - When $\Omega = \{\omega_1, \omega_2, \cdots\}$ (is countable), then probability is generally assigned by
        $$P(\{\omega_i\}) = q_i, \ i = 1, 2, \cdots, \quad \text{with } q_i \geq 0, \ \sum_i q_i = 1$$
      - When $\Omega$ is finite with $n$ elements, a special case is $q_i = \frac{1}{n}, \ \forall i$. (All outcomes equally likely)
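
The four-ball example is small enough to enumerate directly. In this sketch each ball is drawn with probability $1/4$, and `prob("1", "2")` stands for $P(E_1 E_2)$; the helper name and the string encoding of the balls are illustrative choices.

```python
from fractions import Fraction
from itertools import combinations

balls = ["1", "2", "3", "123"]   # inscriptions on the four balls

def prob(*digits):
    """P(every given digit appears on a ball drawn uniformly at random)."""
    favourable = [ball for ball in balls if all(d in ball for d in digits)]
    return Fraction(len(favourable), len(balls))

pairwise_independent = all(
    prob(i, j) == prob(i) * prob(j) for i, j in combinations("123", 2)
)
totally_independent = pairwise_independent and (
    prob("1", "2", "3") == prob("1") * prob("2") * prob("3")
)
```

The pairwise check succeeds, while the triple product condition fails because $P(E_1 E_2 E_3) = 1/4$ whereas the product of the three probabilities is $1/8$.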

- 14. Recap
      - Conditional probability of $A$ given (or conditioned on) $B$ is
        $$P(A \mid B) = \frac{P(AB)}{P(B)}$$
      - This gives us the identity $P(AB) = P(A \mid B)P(B)$.
      - This holds for multiple events, e.g., $P(ABC) = P(A \mid BC)P(B \mid C)P(C)$.
      - Given a partition, $\Omega = B_1 + B_2 + \cdots + B_m$, for any event $A$,
        $$P(A) = \sum_{i=1}^{m} P(A \mid B_i)P(B_i) \quad \text{(Total Probability rule)}$$
      - Bayes rule:
        $$P(D \mid T) = \frac{P(T \mid D)P(D)}{P(T \mid D)P(D) + P(T \mid D^c)P(D^c)}$$
      - Bayes rule can be viewed as transforming a prior probability into a posterior probability.

      Recap: Independence
      - Two events $A, B$ are said to be independent if $P(AB) = P(A)P(B)$.
      - Suppose $P(A), P(B) > 0$. Then, if they are independent,
        $$P(A \mid B) = \frac{P(AB)}{P(B)} = P(A); \quad \text{similarly } P(B \mid A) = P(B)$$
      - If $A, B$ are independent then so are $A$ and $B^c$, $A^c$ and $B$, and $A^c$ and $B^c$.
      - Events $A_1, A_2, \cdots, A_n$ are said to be (totally) independent if for any $k$, $1 \leq k \leq n$, and any indices $i_1, \cdots, i_k$, we have $P(A_{i_1} \cdots A_{i_k}) = P(A_{i_1}) \cdots P(A_{i_k})$.

- 15. Conditional Independence
      - Recall that events may be pair-wise independent, $P(A_i A_j) = P(A_i)P(A_j), \ \forall i \neq j$, without being (totally) independent.
      - Events $A, B$ are said to be (conditionally) independent given $C$ if
        $$P(AB \mid C) = P(A \mid C)P(B \mid C)$$
      - If the above holds,
        $$P(A \mid BC) = \frac{P(ABC)}{P(BC)} = \frac{P(AB \mid C)P(C)}{P(BC)} = \frac{P(A \mid C)P(B \mid C)P(C)}{P(BC)} = P(A \mid C)$$
      - Events may be conditionally independent but not independent. (E.g., 'independent' multiple tests for confirming a disease.)
      - It is also possible that $A, B$ are independent but are not conditionally independent given some other event $C$.

      Use of conditional independence in Bayes rule
      - We can write Bayes rule with multiple conditioning events:
        $$P(A \mid BC) = \frac{P(BC \mid A)P(A)}{P(BC \mid A)P(A) + P(BC \mid A^c)P(A^c)}$$
      - The above gets simplified if we assume
        $$P(BC \mid A) = P(B \mid A)P(C \mid A), \quad P(BC \mid A^c) = P(B \mid A^c)P(C \mid A^c)$$
      - Consider the old example, where now we repeat the test for the disease.
      - Take $A = D$, $B = T_+^1$, $C = T_+^2$, where the superscript indexes the test.
      - Assuming conditional independence we can calculate the new posterior probability using the same information we had about the true positive and false positive rates.
      - Let us consider the example with $P(T_+ \mid D) = 0.99$, $P(T_+ \mid D^c) = 0.05$, $P(D) = 0.1$.
      - Recall that we got $P(D \mid T_+) = 0.69$.
      - Let us suppose the same test is repeated:
        $$P(D \mid T_+^1 T_+^2) = \frac{P(T_+^1 T_+^2 \mid D)P(D)}{P(T_+^1 T_+^2 \mid D)P(D) + P(T_+^1 T_+^2 \mid D^c)P(D^c)}$$
        $$= \frac{P(T_+^1 \mid D)P(T_+^2 \mid D)P(D)}{P(T_+^1 \mid D)P(T_+^2 \mid D)P(D) + P(T_+^1 \mid D^c)P(T_+^2 \mid D^c)P(D^c)}$$
        $$= \frac{0.99 \times 0.99 \times 0.1}{0.99 \times 0.99 \times 0.1 + 0.05 \times 0.05 \times 0.9} \approx 0.98$$
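
The repeated-test calculation generalizes to any number of positive results, provided the tests are assumed conditionally independent given $D$ and given $D^c$, as in the slides. The sketch below encodes exactly that assumption; the function and parameter names are illustrative.

```python
def posterior_after_positive_tests(prior, true_positive_rate,
                                   false_positive_rate, num_tests):
    """P(D | all num_tests results positive).

    Assumes the tests are conditionally independent given D and given D^c,
    so the joint likelihoods are products of the single-test rates.
    """
    likelihood_d = true_positive_rate ** num_tests
    likelihood_not_d = false_positive_rate ** num_tests
    numerator = likelihood_d * prior
    return numerator / (numerator + likelihood_not_d * (1 - prior))

one_test = posterior_after_positive_tests(0.1, 0.99, 0.05, 1)   # about 0.6875
two_tests = posterior_after_positive_tests(0.1, 0.99, 0.05, 2)  # about 0.9776
```

With the numbers of the example, a second positive result lifts the posterior from roughly 0.69 to roughly 0.98. If the two tests shared a common source of error, the product form would not apply and this function would overstate the posterior.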

- 16. Sequential Continuity of Probability
      - A function $f : \Re \to \Re$ is continuous at $x$ if and only if $x_n \to x$ implies $f(x_n) \to f(x)$.
      - Thus, for continuous functions,
        $$f\left(\lim_{n \to \infty} x_n\right) = \lim_{n \to \infty} f(x_n)$$
      - We want to ask whether the probability, which is a function whose domain is $\mathcal{F}$, is also continuous like this.
      - That is, we want to ask the question
        $$P\left(\lim_{n \to \infty} A_n\right) \stackrel{?}{=} \lim_{n \to \infty} P(A_n)$$
      - For this, we need to first define the limit of a sequence of sets.
      - For now we define limits of only monotone sequences. (We will look at the general case later in the course.)
      - A sequence $A_1, A_2, \cdots$ is said to be monotone decreasing if $A_{n+1} \subset A_n, \ \forall n$ (denoted as $A_n \downarrow$).
      - A sequence $A_1, A_2, \cdots$ is said to be monotone increasing if $A_n \subset A_{n+1}, \ \forall n$ (denoted as $A_n \uparrow$).

      (Figure: nested sets illustrating $A_n \downarrow$ and $A_n \uparrow$.)
      - Let $A_n \downarrow$. Then we define its limit as
        $$\lim_{n \to \infty} A_n = \cap_{k=1}^{\infty} A_k$$
      - This is reasonable because, when $A_n \downarrow$, we have $A_n \subset A_{n-1} \subset A_{n-2} \cdots$ and hence $A_n = \cap_{k=1}^{n} A_k$.
      - Similarly, when $A_n \uparrow$, we define the limit as
        $$\lim_{n \to \infty} A_n = \cup_{k=1}^{\infty} A_k$$

- 17. Examples of monotone sequences
      - Let us look at simple examples of monotone sequences of subsets of $\Re$.
      - Consider a sequence of intervals $A_n = [a, b + \frac{1}{n})$, $n = 1, 2, \cdots$ with $a, b \in \Re$, $a < b$.

      (Figure: the real line with $a$, $b$, $b + 0.5$, $b + 1$ marked, showing the right end points of the intervals shrinking towards $b$.)
      - We have $A_n \downarrow$ and $\lim A_n = \cap_i A_i = [a, b]$.
      - Why? Because
        - $b \in A_n, \ \forall n \Rightarrow b \in \cap_i A_i$, and
        - $\forall \epsilon > 0$, $b + \epsilon \notin A_n$ after some $n$ $\Rightarrow b + \epsilon \notin \cap_i A_i$. For example, $b + 0.01 \notin A_{101} = [a, b + \frac{1}{101})$.
      - We have shown that $\cap_n [a, b + \frac{1}{n}) = [a, b]$.
      - Similarly we can get $\cap_n (a - \frac{1}{n}, b] = [a, b]$.
      - Now consider $A_n = [a, b - \frac{1}{n}]$.

      (Figure: the real line with $a$, $b - 1$, $b - 0.5$, $b$ marked, showing the right end points of the intervals growing towards $b$.)
      - Now, $A_n \uparrow$ and $\lim A_n = \cup_n A_n = [a, b)$.
      - Why? Because
        - $\forall \epsilon > 0$, $\exists n$ s.t. $b - \epsilon \in A_n \Rightarrow b - \epsilon \in \cup_n A_n$;
        - but $b \notin A_n, \ \forall n \Rightarrow b \notin \cup_n A_n$.
      - These examples also show how, using countable unions or intersections, we can convert one end of an interval from 'open' to 'closed' or vice versa.
      - To summarize, limits of monotone sequences of events are defined as follows:
        $$A_n \downarrow: \ \lim_{n \to \infty} A_n = \cap_{k=1}^{\infty} A_k \qquad A_n \uparrow: \ \lim_{n \to \infty} A_n = \cup_{k=1}^{\infty} A_k$$
      - Having defined the limits, we now ask the question
        $$P\left(\lim_{n \to \infty} A_n\right) \stackrel{?}{=} \lim_{n \to \infty} P(A_n)$$
        where we assume the sequence is monotone.

      Theorem: Let $A_n \uparrow$. Then $P(\lim_n A_n) = \lim_n P(A_n)$.
      - Since $A_n \uparrow$, $A_n \subset A_{n+1}$.
      - Define sets $B_i$, $i = 1, 2, \cdots$, by
        $$B_1 = A_1, \quad B_k = A_k - A_{k-1}, \ k = 2, 3, \cdots$$

      (Figure: nested sets $A_1 \subset A_2 \subset A_3$, with the rings $B_2$ and $B_3$ marked.)

- 18. Theorem: Let $A_n \uparrow$. Then $P(\lim_n A_n) = \lim_n P(A_n)$.
      - Since $A_n \uparrow$, $A_n \subset A_{n+1}$. Define sets $B_i$, $i = 1, 2, \cdots$, by $B_1 = A_1$, $B_k = A_k - A_{k-1}$, $k = 2, 3, \cdots$.
      - Note that the $B_k$ are mutually exclusive. Also note that $A_n = \cup_{k=1}^{n} B_k$ and hence
        $$P(A_n) = \sum_{k=1}^{n} P(B_k)$$
      - We also have $\cup_{k=1}^{n} A_k = \cup_{k=1}^{n} B_k, \ \forall n$, and hence $\cup_{k=1}^{\infty} A_k = \cup_{k=1}^{\infty} B_k$.
      - Thus we get
        $$P(\lim_n A_n) = P(\cup_{k=1}^{\infty} A_k) = P(\cup_{k=1}^{\infty} B_k) = \sum_{k=1}^{\infty} P(B_k) = \lim_n \sum_{k=1}^{n} P(B_k) = \lim_n P(A_n)$$
      - We showed that when $A_n \uparrow$, $P(\lim_n A_n) = \lim_n P(A_n)$.
      - We can show this for the case $A_n \downarrow$ also.
      - Note that if $A_n \downarrow$, then $A_n^c \uparrow$. Using this and the theorem we can show it. (Left as an exercise)
      - This property is known as monotone sequential continuity of the probability measure.

      Example: never getting a head
      - We can think of a simple example to use this theorem.
      - We keep tossing a fair coin. (We take the tosses to be independent.) We want to show that never getting a head has probability zero.
      - The basic idea is simple: $(0.5)^n \to 0$.
      - But to formalize this we need to specify what our probability space is and then specify what the event (of never getting a head) is.
      - If we toss the coin any fixed $N$ times then we know the sample space can be $\{0, 1\}^N$.
      - But for our problem, we cannot put any fixed limit on the number of tosses and hence our sample space should be for infinite tosses of a coin.
      - We take $\Omega$ as the set of all infinite sequences of 0's and 1's:
        $$\Omega = \{(\omega_1, \omega_2, \cdots) : \omega_i \in \{0, 1\}, \ \forall i\}$$
      - This would be uncountably infinite.
      - We will not specify $\mathcal{F}$ fully. But assume that any subset of $\Omega$ specifiable through outcomes of finitely many coin tosses would be an event.
      - Thus "no head in the first $n$ tosses" would be an event.

- 19. (Infinite coin tosses, continued)
      - What $P$ should we consider for this uncountable $\Omega$? We are not sure what to take.
      - So, let us ask only for some consistency. For any subset of this $\Omega$ that is specified only through outcomes of the first $n$ tosses, that event should have the same probability as in the finite probability space corresponding to $n$ tosses.
      - Consider an event here: $A = \{(\omega_1, \omega_2, \cdots) : \omega_1 = \omega_2 = 0\} \subset \Omega$. $A$ is the event of tails on the first two tosses.
      - We are saying we must have $P(A) = (0.5)^2$.
      - Now we can complete the problem.
      - For $n = 1, 2, \cdots$, define
        $$A_n = \{(\omega_1, \omega_2, \cdots) : \omega_i = 0, \ i = 1, \cdots, n\}$$
      - $A_n$ is the event of no head in the first $n$ tosses and we know $P(A_n) = (0.5)^n$.
      - Note that $\cap_{k=1}^{\infty} A_k$ is the event we want.
      - Note that $A_n \downarrow$ because $A_{n+1} \subset A_n$.
      - Hence we get
        $$P(\cap_{k=1}^{\infty} A_k) = P(\lim_n A_n) = \lim_n P(A_n) = \lim_n (0.5)^n = 0$$

      $\Omega$ as the interval $[0, 1]$
      - The $\Omega$ we considered can be put in correspondence with the interval $[0, 1]$.
      - Each element of $\Omega$ is an infinite sequence of 0's and 1's: $\omega = (\omega_1, \omega_2, \cdots)$, $\omega_i \in \{0, 1\} \ \forall i$.
      - We can put a 'binary point' in front and thus consider $\omega$ to be a real number between 0 and 1.
      - That is, we let $\omega$ correspond to the real number $\omega_1 2^{-1} + \omega_2 2^{-2} + \cdots$.
      - For example, the sequence $(0, 1, 0, 1, 0, 0, 0, \cdots)$ would be the number $2^{-2} + 2^{-4} = 5/16$.
      - Essentially, every number in $[0, 1]$ can be represented by a binary sequence like this and every binary sequence corresponds to a real number between 0 and 1.
      - Thus, our $\Omega$ can be thought of as the interval $[0, 1]$.
      - So, uncountable $\Omega$ arise naturally if we want to consider infinite repetitions of a random experiment.
      - The $P$ we considered would be such that the probability of an interval would be its length.
      - Consider the example event we considered earlier: $A = \{(\omega_1, \omega_2, \cdots) : \omega_1 = \omega_2 = 0\} \subset \Omega$.
      - When we view $\Omega$ as the interval $[0, 1]$, the above is the set of all binary numbers of the form $0.00xxxxxx\cdots$.
      - What is this set of numbers?
      - It ranges from $0.000000\cdots$ to $0.0011111\cdots$.
      - That is the interval $[0, 0.25]$.
      - As we already saw, the probability of this event is $(0.5)^2$, which is the length of this interval.
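
The correspondence between binary sequences and numbers in $[0, 1]$ can be tried out on finite prefixes. The sketch below maps a finite list of bits, read as an infinite sequence padded with zeros, to $\sum_i \omega_i 2^{-i}$; `binary_prefix_to_number` is a hypothetical name introduced here.

```python
from fractions import Fraction

def binary_prefix_to_number(bits):
    """Value of 0.b1 b2 b3 ... in binary, for a finite list of bits (rest taken as 0)."""
    return sum(Fraction(bit, 2 ** (i + 1)) for i, bit in enumerate(bits))

example = binary_prefix_to_number([0, 1, 0, 1])               # 2^-2 + 2^-4
near_upper_end = binary_prefix_to_number([0, 0] + [1] * 20)   # 0.0011...1 in binary
```

The first value is $5/16$, as in the slide. The second starts with two zeros followed by twenty ones and falls just short of $1/4$, which illustrates why sequences with $\omega_1 = \omega_2 = 0$ fill out the interval $[0, 0.25]$.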

- 20. (Infinite coin tosses, continued)
      - We looked at this probability space only as an example where we could use monotone sequential continuity of probability.
      - But this probability space is important and has a lot of interesting properties.
        $$\Omega = \{(\omega_1, \omega_2, \cdots) : \omega_i \in \{0, 1\}, \ \forall i\}$$
      - Here, $\frac{1}{n} \sum_{i=1}^{n} \omega_i$ is the fraction of heads in the first $n$ tosses.
      - Since we are tossing a fair coin repeatedly, we should expect
        $$\lim_{n \to \infty} \frac{1}{n} \sum_{i=1}^{n} \omega_i = \frac{1}{2}$$
      - We expect this to be true for 'almost all' sequences in $\Omega$.
      - That means 'almost all' numbers in $[0, 1]$, when expanded as infinite binary fractions, satisfy this property.
      - This is called Borel's normal number theorem and is an interesting result about real numbers.

      Probability Models
      - As mentioned earlier, everything in probability theory is with reference to an underlying probability space $(\Omega, \mathcal{F}, P)$.
      - Probability theory starts with $(\Omega, \mathcal{F}, P)$.
      - We can say that different $P$ correspond to different models.
      - Theory does not tell you how to get the $P$.
      - The modeller has to decide what $P$ she wants.
      - The theory allows one to derive consequences or properties of the model.
      - Consider the random experiment of tossing a fair coin three times.
      - We can take $\Omega = \{0, 1\}^3$ and can use the following $P_1$.

      | $\omega$ | $P_1(\{\omega\})$ |
      |---|---|
      | 0 0 0 | 1/8 |
      | 0 0 1 | 1/8 |
      | 0 1 0 | 1/8 |
      | 0 1 1 | 1/8 |
      | 1 0 0 | 1/8 |
      | 1 0 1 | 1/8 |
      | 1 1 0 | 1/8 |
      | 1 1 1 | 1/8 |

      - Now probability theory can derive many consequences:
        - The tosses are independent.
        - The probability of 0 heads is 1/8, as is that of 3 heads, while the probability of 1 head is 3/8, as is that of 2 heads.
      - Now consider a $P_2$ (different from $P_1$) on the same $\Omega$.

      | $\omega$ | $P_2(\{\omega\})$ |
      |---|---|
      | 0 0 0 | 1/4 |
      | 0 0 1 | 1/12 |
      | 0 1 0 | 1/12 |
      | 0 1 1 | 1/12 |
      | 1 0 0 | 1/12 |
      | 1 0 1 | 1/12 |
      | 1 1 0 | 1/12 |
      | 1 1 1 | 1/4 |

      - The consequences now change:
        - The probabilities that the number of heads is 0, 1, 2 or 3 are all the same and all equal 1/4.
        - The tosses are not independent.
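
The stated consequences of $P_1$ and $P_2$ can be derived mechanically from the two tables. The sketch below treats 1 as a head; `heads_distribution` and `first_two_tosses_independent` are illustrative names, and the second function tests only one pair of events (head on toss 1, head on toss 2), which is enough to expose the dependence under $P_2$ but is not a full proof of independence under $P_1$.

```python
from fractions import Fraction
from itertools import product

outcomes = list(product((0, 1), repeat=3))   # Omega = {0, 1}^3, with 1 = head

P1 = {w: Fraction(1, 8) for w in outcomes}
P2 = {w: Fraction(1, 4) if sum(w) in (0, 3) else Fraction(1, 12) for w in outcomes}

def heads_distribution(P):
    """Probability of each possible number of heads (0 to 3) under P."""
    dist = {k: Fraction(0) for k in range(4)}
    for w, q in P.items():
        dist[sum(w)] += q
    return dist

def first_two_tosses_independent(P):
    """Check the product rule for 'head on toss 1' and 'head on toss 2'."""
    p_first = sum(q for w, q in P.items() if w[0] == 1)
    p_second = sum(q for w, q in P.items() if w[1] == 1)
    p_both = sum(q for w, q in P.items() if w[0] == 1 and w[1] == 1)
    return p_both == p_first * p_second
```

Under $P_2$ each of the first two tosses is a head with probability $1/2$, yet both are heads with probability $1/3$ rather than $1/4$.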

- 21. ◮ We cannot ask which is the ‘correct’ probability model here.
  ◮ Such a question is meaningless as far as probability theory is concerned.
  ◮ One chooses a model based on the application.
  ◮ If we think the tosses are independent then we choose $P_1$. But if we need to model some dependence among tosses, we choose a model like $P_2$.

  ◮ The model $P_2$ accommodates some dependence among tosses.
  ◮ Outcomes of previous tosses affect the current toss.

  | ω | $P_2(\{\omega\})$ | as a product of conditional probabilities |
  |---|---|---|
  | 0 0 0 | 1/4 | (1/2)(2/3)(3/4) |
  | 0 0 1 | 1/12 | (1/2)(2/3)(1/4) |
  | 0 1 0 | 1/12 | (1/2)(1/3)(2/4) |
  | 0 1 1 | 1/12 | |
  | 1 0 0 | 1/12 | |
  | 1 0 1 | 1/12 | |
  | 1 1 0 | 1/12 | |
  | 1 1 1 | 1/4 | |

  ◮ It is also a useful model.

  We next consider the concept of random variables. These allow one to specify and analyze different probability models. This entire course can be considered as studying different random variables.

  Random Variable
  ◮ A random variable is a real-valued function on Ω: $X : \Omega \to \Re$
  ◮ For example, $\Omega = \{H, T\}$, $X(H) = 1$, $X(T) = 0$.
  ◮ Another example: $\Omega = \{H, T\}^3$, $X(\omega)$ is the number of H’s in ω.
  ◮ A random variable maps each outcome to a real number.
  ◮ It essentially means we can treat all outcomes as real numbers.
  ◮ We can effectively work with ℜ as the sample space in all probability models.
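
The three factorizations shown for $P_2$ are consistent with a simple sequential rule: the probability that the next toss equals a symbol s is (1 + number of earlier tosses equal to s) / (2 + number of earlier tosses). This rule is an inference from those three rows, not something the slides state, so the sketch below should be read as one possible mechanism that reproduces the table. The function name `sequential_prob` is hypothetical.

```python
from fractions import Fraction
from itertools import product


def sequential_prob(outcome):
    """Probability of a 0/1 sequence under the ASSUMED rule
    P(next toss = s | past) = (1 + #earlier tosses equal to s) / (2 + #earlier tosses)."""
    p = Fraction(1)
    for k, s in enumerate(outcome):
        same = sum(1 for t in outcome[:k] if t == s)  # earlier tosses equal to s
        p *= Fraction(1 + same, 2 + k)
    return p


# Rebuild the whole table from the rule.
P2 = {w: sequential_prob(w) for w in product((0, 1), repeat=3)}

for w, p in P2.items():
    print(w, p)
```

Under this rule each toss makes a repeat of the same face more likely, which is the kind of dependence on previous tosses that the slide describes.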

- 22. Recap: Monotone Sequences of Sets
  ◮ A sequence $A_1, A_2, \cdots$ is said to be monotone decreasing if $A_{n+1} \subset A_n, \ \forall n$ (denoted as $A_n \downarrow$).
  ◮ The limit of a monotone decreasing sequence is: $A_n \downarrow: \ \lim_{n\to\infty} A_n = \cap_{k=1}^{\infty} A_k$
  ◮ A sequence $A_1, A_2, \cdots$ is said to be monotone increasing if $A_n \subset A_{n+1}, \ \forall n$ (denoted as $A_n \uparrow$).
  ◮ The limit of a monotone increasing sequence is: $A_n \uparrow: \ \lim_{n\to\infty} A_n = \cup_{k=1}^{\infty} A_k$

  Recap: Monotone Sequential Continuity
  ◮ We showed that $P\left(\lim_{n\to\infty} A_n\right) = \lim_{n\to\infty} P(A_n)$ when $A_n \downarrow$ or $A_n \uparrow$.

  Random Variable
  ◮ Let $(\Omega, \mathcal{F}, P)$ be our probability space and let X be a random variable defined on this probability space.
  ◮ We know X maps Ω into ℜ.
  ◮ This random variable results in a new probability space:
  $$(\Omega, \mathcal{F}, P) \xrightarrow{X} (\Re, \mathcal{B}, P_X)$$
  where ℜ is the new sample space, $\mathcal{B} \subset 2^{\Re}$ is the new set of events and $P_X$ is a probability defined on $\mathcal{B}$.
  ◮ For now we will assume that any subset of ℜ that we want would be in $\mathcal{B}$ and hence is an event.
  ◮ $P_X$ is a new probability measure (which depends on P and X) that assigns probability to different subsets of ℜ.

- 23. ◮ Given a probability space $(\Omega, \mathcal{F}, P)$ and a random variable X, we get
  $$(\Omega, \mathcal{F}, P) \xrightarrow{X} (\Re, \mathcal{B}, P_X)$$
  ◮ We define $P_X$ by
  $$P_X(B) = P(\{\omega \in \Omega : X(\omega) \in B\}), \quad B \in \mathcal{B}$$
  (Figure: X maps the sample space to the real line; B is a subset of the real line.)
  ◮ We use the notation $[X \in B] = \{\omega \in \Omega : X(\omega) \in B\}$
  ◮ So, now we can write $P_X(B) = P([X \in B]) = P[X \in B]$
  ◮ For the definition of $P_X$ to be proper, for each $B \in \mathcal{B}$ we must have $[X \in B] \in \mathcal{F}$. We will assume that. (This is trivially true if $\mathcal{F} = 2^{\Omega}$.)
  ◮ We can easily verify that $P_X$ is a probability measure, that is, it satisfies the axioms.
  ◮ Easy to see: $P_X(B) \geq 0, \ \forall B$ and $P_X(\Re) = 1$
  ◮ If $B_1 \cap B_2 = \phi$ then $[X \in B_1]$ and $[X \in B_2]$ are disjoint events and $[X \in B_1 \cup B_2] = [X \in B_1] \cup [X \in B_2]$. Hence
  $$P_X(B_1 \cup B_2) = P[X \in B_1 \cup B_2] = P[X \in B_1] + P[X \in B_2] = P_X(B_1) + P_X(B_2)$$

  ◮ Let us look at a couple of simple examples.
  ◮ Let $\Omega = \{H, T\}$ and $P(\{H\}) = p$. Let $X(H) = 1$; $X(T) = 0$.
  $$[X \in \{0\}] = \{\omega : X(\omega) = 0\} = \{T\}$$
  $$[X \in [-3.14, 0.552]\,] = \{\omega : -3.14 \leq X(\omega) \leq 0.552\} = \{T\}$$
  $$[X \in (0.62, 15.5)] = \{\omega : 0.62 < X(\omega) < 15.5\} = \{H\}$$
  $$[X \in [-2, 2)\,] = \Omega$$
  ◮ Hence we get
  $$P_X(\{0\}) = (1 - p) = P_X([-3.14, 0.552]); \quad P_X((0.62, 15.5)) = p; \quad P_X([-2, 2)) = 1$$

- 24. ◮ Let $\Omega = \{H, T\}^3 = \{HHH, HHT, \cdots, TTT\}$. Let P be specified through the ‘equally likely’ assignment. Let $X(\omega)$ be the number of H’s in ω. Thus, $X(THT) = 1$. (X takes one of the values 0, 1, 2, or 3.)
  ◮ We can once again write down $[X \in B]$ for different $B \subset \Re$:
  $$[X \in (0, 1]\,] = \{HTT, THT, TTH\}; \quad [X \in (-1.2, 2.78)\,] = \Omega - \{HHH\}$$
  ◮ Hence $P_X((0, 1]) = \frac{3}{8}; \quad P_X((-1.2, 2.78)) = \frac{7}{8}$

  ◮ A random variable defined on $(\Omega, \mathcal{F}, P)$ results in a new or induced probability space $(\Re, \mathcal{B}, P_X)$.
  ◮ The Ω may be countable or uncountable (even though we looked only at examples of finite Ω).
  ◮ Thus, we can study probability models by taking ℜ as the sample space through the use of random variables.
  ◮ However, there are some technical issues regarding what $\mathcal{B}$ we should consider.
  ◮ We briefly consider this and then move on to studying random variables.

  ◮ We want to look at the probability space $(\Re, \mathcal{B}, P_X)$.
  ◮ If we could take $\mathcal{B} = 2^{\Re}$ then everything would be simple. But that is not feasible.
  ◮ What this means is that if we want every subset of the real line to be an event, we cannot construct a probability measure (to satisfy the axioms).

  ◮ Let us consider Ω = [0, 1].
  ◮ This is the simplest example of uncountable Ω we considered.
  ◮ We also saw that this sample space comes up when we consider infinite tosses of a coin.
  ◮ The simplest extension of the idea of ‘equally likely’ is to say that the probability of an event (subset of Ω) is the length of the event (subset).
  ◮ But not all subsets of [0, 1] are intervals, and length is defined only for intervals.
  ◮ We can define the length of a countable union of disjoint intervals to be the sum of the lengths of the individual intervals.
  ◮ But what about subsets that may not be countable unions of disjoint intervals?
  ◮ Well, we say those can be assigned probability by using the axioms.
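
For a finite Ω the induced measure $P_X(B) = P[X \in B]$ can be computed by listing the pre-image of B. A minimal sketch for the three-toss example follows; representing a set B by a membership test (`in_B`) and the helper names `preimage` and `P_X` are choices made here for illustration.

```python
from fractions import Fraction
from itertools import product

OMEGA = ["".join(t) for t in product("HT", repeat=3)]
P = {w: Fraction(1, 8) for w in OMEGA}  # 'equally likely' assignment


def X(w):
    """Number of H's in the outcome w."""
    return w.count("H")


def preimage(in_B):
    """The event [X in B] = {w : X(w) in B}; B is given as a membership test."""
    return {w for w in OMEGA if in_B(X(w))}


def P_X(in_B):
    """Induced probability P_X(B) = P([X in B])."""
    return sum(P[w] for w in preimage(in_B))


print(sorted(preimage(lambda x: 0 < x <= 1)), P_X(lambda x: 0 < x <= 1))
print(P_X(lambda x: -1.2 < x < 2.78))
```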

- 25. ◮ Thus the question is the following:
  ◮ Can we construct a function $m : 2^{[0,1]} \to [0, 1]$ such that
  1. $m(A) = \text{length}(A)$ if $A \subset [0, 1]$ is an interval
  2. $m(\cup_i A_i) = \sum_i m(A_i)$ where $A_i \cap A_j = \phi$ whenever $i \neq j$ ($A_1, A_2, \cdots \subset [0, 1]$)
  ◮ The surprising answer is ‘NO’.
  ◮ This is a fundamental result in real analysis.
  ◮ Hence for the probability space $(\Re, \mathcal{B}, P_X)$ we cannot take $\mathcal{B} = 2^{\Re}$. (Recall that for countable Ω we can take $\mathcal{F} = 2^{\Omega}$.)
  ◮ Now the question is: what is the best $\mathcal{B}$ we can have?

  σ-algebra
  ◮ An $\mathcal{F} \subset 2^{\Omega}$ is called a σ-algebra (also called σ-field) on Ω if it satisfies the following:
  1. $\Omega \in \mathcal{F}$
  2. $A \in \mathcal{F} \Rightarrow A^c \in \mathcal{F}$
  3. $A_1, A_2, \cdots \in \mathcal{F} \Rightarrow \cup_i A_i \in \mathcal{F}$
  ◮ Thus a σ-algebra is a collection of subsets of Ω that is closed under complements and countable unions (and hence countable intersections, because $\cap_i A_i = (\cup_i A_i^c)^c$).
  ◮ Note that $2^{\Omega}$ is obviously a σ-algebra.
  ◮ In a probability space $(\Omega, \mathcal{F}, P)$, if $\mathcal{F} \neq 2^{\Omega}$ then we want it to be a σ-algebra. (Why?)

  ◮ It is easy to construct examples of σ-algebras. Let $A \subset \Omega$. Then $\mathcal{F} = \{\Omega, \phi, A, A^c\}$ is a σ-algebra.
  ◮ For example, with $\Omega = \{1, 2, 3, 4, 5, 6\}$, $\mathcal{F} = \{\Omega, \phi, \{1, 3, 5\}, \{2, 4, 6\}\}$ is a σ-algebra.
  ◮ Suppose on this Ω we want to make a σ-algebra containing {1, 2} and {3, 4}:
  $$\{\Omega, \phi, \{1, 2\}, \{3, 4\}, \{3, 4, 5, 6\}, \{1, 2, 5, 6\}, \{1, 2, 3, 4\}, \{5, 6\}\}$$
  ◮ This is the ‘smallest’ σ-algebra containing {1, 2}, {3, 4}.

  ◮ Let $\mathcal{F}_1, \mathcal{F}_2$ be σ-algebras on Ω.
  ◮ Then, so is $\mathcal{F}_1 \cap \mathcal{F}_2$.
  ◮ It is simple to show. (E.g., $A \in \mathcal{F}_1 \cap \mathcal{F}_2 \Rightarrow A \in \mathcal{F}_1, A \in \mathcal{F}_2 \Rightarrow A^c \in \mathcal{F}_1, A^c \in \mathcal{F}_2 \Rightarrow A^c \in \mathcal{F}_1 \cap \mathcal{F}_2$.)
  ◮ Let $\mathcal{G} \subset 2^{\Omega}$. We denote by $\sigma(\mathcal{G})$ the smallest σ-algebra containing $\mathcal{G}$.
  ◮ It is defined as the intersection of all σ-algebras containing $\mathcal{G}$ (and hence is well defined).
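
On a finite Ω the smallest σ-algebra containing a given collection can be found by repeatedly closing under complements and unions until nothing new appears. The sketch below does this for the die example; the function name `generated_sigma_algebra` is hypothetical, and the procedure relies on Ω being finite (so that countable unions reduce to finite ones).

```python
from itertools import combinations


def generated_sigma_algebra(omega, generators):
    """Smallest collection containing omega, the empty set and the generators
    that is closed under complement and pairwise union.
    On a finite omega this is sigma(G)."""
    omega = frozenset(omega)
    sets = {omega, frozenset()} | {frozenset(g) for g in generators}
    while True:
        new = {omega - a for a in sets}                    # complements
        new |= {a | b for a, b in combinations(sets, 2)}   # pairwise unions
        if new <= sets:                                    # nothing new: closed
            return sets
        sets |= new


F = generated_sigma_algebra(range(1, 7), [{1, 2}, {3, 4}])
for s in sorted(F, key=lambda s: (len(s), sorted(s))):
    print(sorted(s))
```

The result has eight sets, matching the list on the slide.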

- 26. ◮ Let us get back to the question we started with.
  ◮ In the probability space $(\Re, \mathcal{B}, P)$, what is the $\mathcal{B}$ we should choose?
  ◮ We can choose it to be the smallest σ-algebra containing all intervals.
  ◮ That is called the Borel σ-algebra, $\mathcal{B}$.
  ◮ It contains all intervals, all complements, countable unions and intersections of intervals, and all sets that can be obtained through complements, countable unions and/or intersections of such sets, and so on.

  Borel σ-algebra
  ◮ Let $\mathcal{G} = \{(-\infty, x] : x \in \Re\}$
  ◮ We can define the Borel σ-algebra, $\mathcal{B}$, as the smallest σ-algebra containing $\mathcal{G}$.
  ◮ We can see that $\mathcal{B}$ would contain all intervals.
  1. $(-\infty, x) \in \mathcal{B}$ because $(-\infty, x) = \cup_n (-\infty, x - \frac{1}{n}]$
  2. $(x, \infty) \in \mathcal{B}$ because $(x, \infty) = (-\infty, x]^c$
  3. $[x, \infty) \in \mathcal{B}$ because $[x, \infty) = \cap_n (x - \frac{1}{n}, \infty)$
  4. $(x, y] \in \mathcal{B}$ because $(x, y] = (-\infty, y] \cap (x, \infty)$
  5. $[x, y] \in \mathcal{B}$ because $[x, y] = \cap_n (x - \frac{1}{n}, y]$
  6. $[x, y), (x, y) \in \mathcal{B}$, similarly
  ◮ Thus, $\sigma(\mathcal{G})$ is also the smallest σ-algebra containing all intervals.

  Borel σ-algebra
  ◮ We have defined $\mathcal{B}$ as $\mathcal{B} = \sigma(\{(-\infty, x] : x \in \Re\})$
  ◮ It is also the smallest σ-algebra containing all intervals.
  ◮ Elements of $\mathcal{B}$ are called Borel sets.
  ◮ Intervals (including singleton sets), complements of intervals, countable unions and intersections of intervals, countable unions and intersections of such sets, and so on, are all Borel sets.
  ◮ The Borel σ-algebra contains enough sets for our purposes.
  ◮ Are there any subsets of the real line that are not Borel?
  ◮ YES!! There are infinitely many non-Borel sets!

  Random Variables
  ◮ Given a probability space $(\Omega, \mathcal{F}, P)$, a random variable is a real-valued function on Ω.
  ◮ It essentially results in an induced probability space
  $$(\Omega, \mathcal{F}, P) \xrightarrow{X} (\Re, \mathcal{B}, P_X)$$
  where $\mathcal{B}$ is the Borel σ-algebra.
  ◮ We define $P_X$ as: for all Borel sets $B \subset \Re$,
  $$P_X(B) = P[X \in B] = P(\{\omega \in \Omega : X(\omega) \in B\})$$
  ◮ For X to be a random variable, the following should also hold:
  $$[X \in B] = \{\omega \in \Omega : X(\omega) \in B\} \in \mathcal{F}, \quad \forall B \in \mathcal{B}$$
  ◮ We always assume this.

- 27. ◮ Let X be a random variable.
  ◮ It represents a probability model with ℜ as the sample space.
  ◮ The probability assigned to different events (Borel subsets of ℜ) is
  $$P_X(B) = P[X \in B] = P(\{\omega \in \Omega : X(\omega) \in B\})$$
  ◮ How does one represent this probability measure?

  Distribution function of a random variable
  ◮ Let X be a random variable. Its distribution function is $F_X : \Re \to \Re$ defined by
  $$F_X(x) = P[X \in (-\infty, x]] = P(\{\omega \in \Omega : X(\omega) \leq x\})$$
  ◮ We write the event $\{\omega : X(\omega) \leq x\}$ as $[X \leq x]$. We follow this notation with any such relational statement involving X; e.g., $[X \neq 3]$ represents the event $\{\omega \in \Omega : X(\omega) \neq 3\}$.
  ◮ Thus we have
  $$F_X(x) = P[X \leq x] = P(\{\omega \in \Omega : X(\omega) \leq x\}) = P_X((-\infty, x])$$
  ◮ The distribution function $F_X$ completely specifies the probability measure $P_X$.
  ◮ This is also sometimes called the cumulative distribution function.
  ◮ $F_X$ is a real-valued function of a real variable.
  ◮ Let us look at a simple example.

  ◮ Consider tossing of a fair coin: $\Omega = \{T, H\}$, $P(\{T\}) = P(\{H\}) = 0.5$.
  ◮ Let $X(T) = 0$ and $X(H) = 1$. We want to calculate $F_X$.
  ◮ For this we want the event $[X \leq x]$ for different x.
  ◮ Let us first look at some examples:
  $$[X \leq -0.5] = \{\omega : X(\omega) \leq -0.5\} = \phi$$
  $$[X \leq 0.25] = \{\omega : X(\omega) \leq 0.25\} = \{T\}$$
  $$[X \leq 1.3] = \{\omega : X(\omega) \leq 1.3\} = \Omega$$
  ◮ Thus we get
  $$[X \leq x] = \{\omega : X(\omega) \leq x\} = \begin{cases} \phi & \text{if } x < 0 \\ \{T\} & \text{if } 0 \leq x < 1 \\ \Omega & \text{if } x \geq 1 \end{cases}$$

- 28. ◮ We are considering: $\Omega = \{T, H\}$, $P(\{T\}) = P(\{H\}) = 0.5$.
  ◮ $X(T) = 0$ and $X(H) = 1$. We want to calculate $F_X$.
  ◮ We showed
  $$[X \leq x] = \{\omega : X(\omega) \leq x\} = \begin{cases} \phi & \text{if } x < 0 \\ \{T\} & \text{if } 0 \leq x < 1 \\ \Omega & \text{if } x \geq 1 \end{cases}$$
  ◮ Hence $F_X(x) = P[X \leq x]$ is given by
  $$F_X(x) = \begin{cases} 0 & \text{if } x < 0 \\ 0.5 & \text{if } 0 \leq x < 1 \\ 1 & \text{if } x \geq 1 \end{cases}$$
  Please note that x is a ‘dummy variable’: the same function can be written as $F_X(y) = P[X \leq y]$, with $F_X(y) = 0$ if $y < 0$, $0.5$ if $0 \leq y < 1$ and $1$ if $y \geq 1$.
  ◮ (Figure: plot of this distribution function.)

  ◮ Let us look at another example.
  ◮ Let Ω = [0, 1] and take the events to be Borel subsets of [0, 1]. (That is, $\mathcal{F} = \{B \cap [0, 1] : B \in \mathcal{B}\}$.)
  ◮ We take P to be such that the probability of an interval is its length.
  ◮ This is the ‘usual’ probability space whenever we take Ω = [0, 1].
  ◮ Let $X(\omega) = \omega$.
  ◮ We want to find the distribution function of X.

- 29. ◮ Once again we need to find the event $[X \leq x]$ for different values of x.
  ◮ Note that the function X takes values in [0, 1] and $X(\omega) = \omega$.
  $$[X \leq x] = \{\omega \in \Omega : X(\omega) \leq x\} = \{\omega \in [0, 1] : \omega \leq x\} = \begin{cases} \phi & \text{if } x < 0 \\ [0, x] & \text{if } 0 \leq x < 1 \\ \Omega & \text{if } x \geq 1 \end{cases}$$
  ◮ Hence $F_X(x) = P[X \leq x]$ is given by
  $$F_X(x) = \begin{cases} 0 & \text{if } x < 0 \\ x & \text{if } 0 \leq x < 1 \\ 1 & \text{if } x \geq 1 \end{cases}$$
  ◮ (Figure: plot of this distribution function.)

  Properties of Distribution Functions
  ◮ The distribution function of a random variable X is given by
  $$F_X(x) = P[X \leq x] = P(\{\omega : X(\omega) \leq x\})$$
  ◮ Any distribution function should satisfy the following:
  1. $0 \leq F_X(x) \leq 1, \ \forall x$
  2. $F_X(-\infty) = 0; \ F_X(\infty) = 1$
  3. $F_X$ is non-decreasing: $x_1 \leq x_2 \Rightarrow F_X(x_1) \leq F_X(x_2)$, because
  $$x_1 \leq x_2 \Rightarrow (-\infty, x_1] \subset (-\infty, x_2] \Rightarrow P_X((-\infty, x_1]) \leq P_X((-\infty, x_2]) \Rightarrow F_X(x_1) \leq F_X(x_2)$$
  4. $F_X$ is right continuous and has left-hand limits.

  ◮ Right continuity of $F_X$: $x_n \downarrow x \Rightarrow F_X(x_n) \to F_X(x)$
  ◮ $x_n \downarrow x$ implies that the sequence of events $(-\infty, x_n]$ is monotone decreasing.
  (Figure: points $x_1, x_2, \ldots$ on the real line decreasing to x.)
  ◮ Also, $\lim_n (-\infty, x_n] = \cap_n (-\infty, x_n] = (-\infty, x]$
  ◮ This implies
  $$\lim_n P_X((-\infty, x_n]) = P_X(\lim_n (-\infty, x_n]) = P_X((-\infty, x])$$
  ◮ This in turn implies $\lim_{x_n \downarrow x} F_X(x_n) = F_X(x)$
  ◮ Using the usual notation for the right limit of a function, we can write $F_X(x^+) = F_X(x), \ \forall x$.

- 30. ◮ $F_X$ is right-continuous at all x.
  ◮ Next, let us look at the left-hand limits: $\lim_{x_n \uparrow x} F_X(x_n)$
  ◮ When $x_n \uparrow x$, the sequence of events $(-\infty, x_n]$ is monotone increasing and
  $$\lim_n (-\infty, x_n] = \cup_n (-\infty, x_n] = (-\infty, x)$$
  ◮ By sequential continuity of probability, we have
  $$\lim_n P_X((-\infty, x_n]) = P_X(\lim_n (-\infty, x_n]) = P_X((-\infty, x))$$
  ◮ Hence we get
  $$F_X(x^-) = \lim_{x_n \uparrow x} F_X(x_n) = \lim_n P_X((-\infty, x_n]) = P_X((-\infty, x))$$
  ◮ Thus, at every x the left limit of $F_X$ exists.

  ◮ $F_X$ is right-continuous: $F_X(x^+) = F_X(x) = P_X((-\infty, x])$
  ◮ It has left limits: $F_X(x^-) = P_X((-\infty, x))$
  ◮ If $A \subset B$ then $P(B - A) = P(B) - P(A)$
  ◮ We have $(-\infty, x] - (-\infty, x) = \{x\}$. Hence
  $$P_X((-\infty, x]) - P_X((-\infty, x)) = P_X(\{x\}) = P(\{\omega : X(\omega) = x\})$$
  ◮ Thus we get
  $$F_X(x^+) - F_X(x^-) = P[X = x] = P(\{\omega : X(\omega) = x\})$$
  ◮ When $F_X$ is discontinuous at x, the height of the discontinuity is the probability that X takes that value.
  ◮ And, if $F_X$ is continuous at x then $P[X = x] = 0$.

  Distribution Functions
  ◮ Let X be a random variable.
  ◮ Its distribution function, $F_X : \Re \to \Re$, is given by $F_X(x) = P[X \leq x]$
  ◮ The distribution function satisfies
  1. $0 \leq F_X(x) \leq 1, \ \forall x$
  2. $F_X(-\infty) = 0; \ F_X(\infty) = 1$
  3. $F_X$ is non-decreasing: $x_1 \leq x_2 \Rightarrow F_X(x_1) \leq F_X(x_2)$
  4. $F_X$ is right continuous and has left-hand limits.
  ◮ We also have $F_X(x^+) - F_X(x^-) = P[X = x]$
  ◮ Any real-valued function of a real variable satisfying the above four properties would be a distribution function of some random variable.

  ◮ $F_X(x) = P[X \leq x] = P[X \in (-\infty, x]\,]$
  ◮ Given $F_X$, we can, in principle, find $P[X \in B]$ for all Borel sets.
  ◮ In particular, for $a < b$,
  $$P[a < X \leq b] = P[X \in (a, b]\,] = P[X \in ((-\infty, b] - (-\infty, a])\,] = P[X \in (-\infty, b]\,] - P[X \in (-\infty, a]\,] = F_X(b) - F_X(a)$$

- 31. ◮ There are two classes of random variables that we would study here.
  ◮ These are called discrete and continuous random variables.
  ◮ There can be random variables that are neither discrete nor continuous.
  ◮ But these two are important classes of random variables that we deal with in this course.
  ◮ Note that the distribution function is defined for all random variables.

  Discrete Random Variables
  ◮ A random variable X is said to be discrete if it takes only countably many distinct values.
  ◮ Countably many means finite or countably infinite.
  ◮ If $X : \Omega \to \Re$ is discrete, its (strict) range is countable.
  ◮ Any random variable that is defined on a finite or countable Ω would be discrete.
  ◮ Thus the family of discrete random variables includes all probability models on finite or countably infinite sample spaces.

  Discrete Random Variable Example
  ◮ Consider three independent tosses of a fair coin.
  ◮ $\Omega = \{H, T\}^3$ and $X(\omega)$ is the number of H’s in ω.
  ◮ This rv takes four distinct values, namely, 0, 1, 2, 3.
  ◮ We denote this as $X \in \{0, 1, 2, 3\}$
  ◮ Let us find the distribution function of this rv.
  ◮ Let us take some examples of $[X \leq x]$:
  $$[X \leq 0.72] = \{\omega : X(\omega) \leq 0.72\} = \{\omega : X(\omega) = 0\} = [X = 0]$$
  $$[X \leq 1.57] = \{\omega : X(\omega) \leq 1.57\} = \{\omega : X(\omega) = 0\} \cup \{\omega : X(\omega) = 1\} = [X = 0 \text{ or } 1]$$

  ◮ $F_X(x) = P[X \leq x]$ (Recall $X \in \{0, 1, 2, 3\}$)
  ◮ The event $[X \leq x]$ for different x can be seen to be
  $$[X \leq x] = \begin{cases} \phi & x < 0 \\ \{TTT\} & 0 \leq x < 1 \\ \{TTT, HTT, THT, TTH\} & 1 \leq x < 2 \\ \Omega - \{HHH\} & 2 \leq x < 3 \\ \Omega & x \geq 3 \end{cases}$$
  ◮ So, we get the distribution function as
  $$F_X(x) = \begin{cases} 0 & x < 0 \\ \frac{1}{8} & 0 \leq x < 1 \\ \frac{4}{8} & 1 \leq x < 2 \\ \frac{7}{8} & 2 \leq x < 3 \\ 1 & x \geq 3 \end{cases}$$

- 32. ◮ (Figure: plot of this distribution function.)
  ◮ This is a stair-case function.
  ◮ It has jumps at x = 0, 1, 2, 3, which are the values that X takes. In between these it is constant.
  ◮ The jump at, e.g., x = 2 is 3/8, which is the probability of X taking that value.

  Recap: Random Variables
  ◮ Given a probability space $(\Omega, \mathcal{F}, P)$, a random variable is a real-valued function on Ω.
  ◮ It essentially results in an induced probability space
  $$(\Omega, \mathcal{F}, P) \xrightarrow{X} (\Re, \mathcal{B}, P_X)$$
  where $\mathcal{B}$ is the Borel σ-algebra and
  $$P_X(B) = P[X \in B] = P(\{\omega \in \Omega : X(\omega) \in B\})$$

  Recap: σ-algebra
  ◮ An $\mathcal{F} \subset 2^{\Omega}$ is called a σ-algebra (also called σ-field) on Ω if it satisfies
  1. $\Omega \in \mathcal{F}$
  2. $A \in \mathcal{F} \Rightarrow A^c \in \mathcal{F}$
  3. $A_1, A_2, \cdots \in \mathcal{F} \Rightarrow \cup_i A_i \in \mathcal{F}$
  ◮ Thus a σ-algebra is a collection of subsets of Ω that is closed under complements and countable unions (and hence countable intersections).
  ◮ The Borel σ-algebra (on ℜ), $\mathcal{B}$, is the smallest σ-algebra containing all intervals.
  ◮ We also have $\mathcal{B} = \sigma(\{(-\infty, x] : x \in \Re\})$

  Recap: Distribution function of a random variable
  ◮ Let X be a random variable. Its distribution function, $F_X : \Re \to \Re$, is defined by
  $$F_X(x) = P[X \leq x] = P(\{\omega \in \Omega : X(\omega) \leq x\})$$
  ◮ The distribution function, $F_X$, completely specifies the probability measure, $P_X$.

- 33. Recap: Properties of distribution function
  ◮ The distribution function satisfies
  1. $0 \leq F_X(x) \leq 1, \ \forall x$
  2. $F_X(-\infty) = 0; \ F_X(\infty) = 1$
  3. $F_X$ is non-decreasing: $x_1 \leq x_2 \Rightarrow F_X(x_1) \leq F_X(x_2)$
  4. $F_X$ is right continuous and has left-hand limits.
  ◮ Any real-valued function of a real variable satisfying the above four properties would be a distribution function of some random variable.
  ◮ We also have
  $$F_X(x^+) - F_X(x^-) = F_X(x) - F_X(x^-) = P[X = x]$$
  $$P[a < X \leq b] = F_X(b) - F_X(a)$$
  ◮ There are two classes of random variables that we study here: discrete and continuous random variables. The distribution function is defined for all random variables.
  ◮ A random variable X is said to be discrete if it takes only countably many (finite or countably infinite) distinct values.

- 34. ◮ For X, the number of heads in three independent tosses of a fair coin, we know that $F_X(x) - F_X(x^-) = P[X = x]$.
  ◮ For example,
  $$F_X(2) - F_X(2^-) = P[X = 2] = P(\{\omega : X(\omega) = 2\}) = P(\{THH, HTH, HHT\}) = \frac{3}{8}$$
  ◮ The $F_X$ is a stair-case function.
  ◮ It has jumps at each value assumed by X (and is constant in between).
  ◮ The height of the jump is equal to the probability of X taking that value.
  ◮ All discrete random variables would have this general form of distribution function.

  ◮ Let X be a discrete rv and let $X \in \{a_1, a_2, \cdots, a_n\}$
  ◮ As a notation we assume: $a_1 < a_2 < \cdots < a_n$
  ◮ Let $[X = a_i] = \{\omega : X(\omega) = a_i\} = B_i$ and let $P(B_i) = q_i$.
  ◮ Since X is a function on Ω, $B_1, \cdots, B_n$ form a partition of Ω.
  ◮ Note that $q_i \geq 0$ and $\sum_{i=1}^{n} q_i = 1$.
  ◮ If $x < a_1$ then $[X \leq x] = \phi$.
  ◮ If $a_1 \leq x < a_2$ then $[X \leq x] = [X = a_1] = B_1$
  ◮ If $a_2 \leq x < a_3$ then $[X \leq x] = [X = a_1] \cup [X = a_2] = B_1 \cup B_2$ (a disjoint union)

- 35. ◮ Hence we can write the distribution function as
  $$F_X(x) = \begin{cases} 0 & x < a_1 \\ P(B_1) & a_1 \leq x < a_2 \\ P(B_1) + P(B_2) & a_2 \leq x < a_3 \\ \quad \vdots & \\ \sum_{i=1}^{k} P(B_i) & a_k \leq x < a_{k+1} \\ \quad \vdots & \\ 1 & x \geq a_n \end{cases}$$
  ◮ We can write this compactly as
  $$F_X(x) = \sum_{k : a_k \leq x} q_k$$
  ◮ Note that all this holds even when X takes countably infinitely many values.

  ◮ Let X be a discrete rv with $X \in \{x_1, x_2, \cdots\}$.
  ◮ Let $q_i = P[X = x_i]$ ($= P(\{\omega : X(\omega) = x_i\})$)
  ◮ We have $q_i \geq 0$ and $\sum_i q_i = 1$.
  ◮ If X is discrete then there is a countable set E such that $P[X \in E] = 1$.
  ◮ The distribution function of X is specified completely by these $q_i$.

  Probability mass function, $f_X$
  ◮ Let X be a discrete rv with $X \in \{x_1, x_2, \cdots\}$.
  ◮ The probability mass function (pmf) of X is defined by
  $$f_X(x_i) = P[X = x_i]; \quad f_X(x) = 0, \text{ for all other } x$$
  ◮ $f_X$ is also a real-valued function of a real variable.
  ◮ We can write the definition compactly as $f_X(x) = P[X = x]$
  ◮ The distribution function (df) and the pmf are related as
  $$F_X(x) = \sum_{i : x_i \leq x} f_X(x_i); \qquad f_X(x) = F_X(x) - F_X(x^-)$$
  ◮ We can get the pmf from the df and the df from the pmf.

  Properties of pmf
  ◮ The probability mass function of a discrete random variable $X \in \{x_1, x_2, \cdots\}$ satisfies
  1. $f_X(x) \geq 0, \ \forall x$ and $f_X(x) = 0$ if $x \notin \{x_1, x_2, \cdots\}$
  2. $\sum_i f_X(x_i) = 1$
  ◮ Any function satisfying the above two would be a pmf of some discrete random variable.
  ◮ We can specify a discrete random variable by giving either $F_X$ or $f_X$.
  ◮ Please remember that we have defined the distribution function for any random variable. But the pmf is defined only for discrete random variables.
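
The two relations between the df and the pmf can be checked on the three-toss example. The following is a small sketch under that assumption (the pmf dictionary is the one for the number of heads in three fair tosses; the helper names `F`, `F_left` and `jump` are chosen here for illustration).

```python
from fractions import Fraction

# pmf of X = number of heads in three tosses of a fair coin
pmf = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}


def F(x):
    """Distribution function F_X(x) = sum of f_X(x_i) over x_i <= x."""
    return sum((q for v, q in pmf.items() if v <= x), Fraction(0))


def F_left(x):
    """Left limit F_X(x-) = P[X < x] = sum of f_X(x_i) over x_i < x."""
    return sum((q for v, q in pmf.items() if v < x), Fraction(0))


def jump(x):
    """Height of the jump of F_X at x, which equals P[X = x]."""
    return F(x) - F_left(x)


print([F(x) for x in (-0.5, 0.72, 1.57, 2, 3)])
print(jump(2), jump(1.5))
```

The same functions also give interval probabilities, for example `F(2) - F(0)` for $P[0 < X \leq 2]$.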

- 36. ◮ Any discrete random variable can be specified by
  ◮ giving the set of values of X, $\{x_1, x_2, \cdots\}$, and
  ◮ numbers $q_i$ such that $q_i = P[X = x_i] = f_X(x_i)$
  ◮ Note that we must have $q_i \geq 0$ and $\sum_i q_i = 1$.
  ◮ As we saw, this is how we can specify a probability assignment on any countable sample space.

  Computations of Probabilities for discrete rv’s
  ◮ A discrete random variable is specified by giving either the df or the pmf. One can be obtained from the other.
  ◮ We normally specify it through the pmf.
  ◮ Given $X \in \{x_1, x_2, \cdots\}$ and $f_X$, we can (in principle) compute the probability of any event:
  $$P[X \in B] = \sum_{i : \, x_i \in B} f_X(x_i)$$
  ◮ For example, if $X \in \{0, 1, 2, 3\}$ then
  $$P[X \in [0.5, 1.32] \cup [2.75, 5.2]\,] = f_X(1) + f_X(3)$$
  ◮ We next look at some standard discrete random variable models.

  Bernoulli Distribution
  ◮ Bernoulli random variable: $X \in \{0, 1\}$ with $f_X(1) = p$; $f_X(0) = 1 - p$; where $0 < p < 1$ is a parameter.
  ◮ This $f_X$ is easily seen to be a pmf.
  ◮ Consider $(\Omega, \mathcal{F}, P)$ with $B \in \mathcal{F}$. (The Ω here may be uncountable.)
  ◮ Consider the random variable
  $$I_B(\omega) = \begin{cases} 0 & \text{if } \omega \notin B \\ 1 & \text{if } \omega \in B \end{cases}$$
  ◮ It is called the indicator (random variable) of B.
  ◮ $P[I_B = 1] = P(\{\omega : I_B(\omega) = 1\}) = P(B)$
  ◮ Thus, this indicator rv has a Bernoulli distribution with $p = P(B)$.
  ◮ One of the df examples we saw earlier is that of a Bernoulli rv.
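
As a concrete instance of the indicator construction, the sketch below uses a hypothetical example that is not in the slides: one roll of a fair die with the event B = {1, 2}. The pmf of the indicator is computed from the definition $f_X(x) = P(\{\omega : X(\omega) = x\})$ and comes out as Bernoulli with $p = P(B)$.

```python
from fractions import Fraction

OMEGA = range(1, 7)                      # hypothetical example: one roll of a fair die
P = {w: Fraction(1, 6) for w in OMEGA}
B = {1, 2}                               # an arbitrary event chosen for illustration


def indicator(w):
    """I_B(w) = 1 if w is in B, 0 otherwise."""
    return 1 if w in B else 0


def pmf_of(X):
    """pmf of a random variable X on (OMEGA, P): f_X(x) = P({w : X(w) = x})."""
    f = {}
    for w in OMEGA:
        f[X(w)] = f.get(X(w), Fraction(0)) + P[w]
    return f


f_I = pmf_of(indicator)
print(f_I)  # Bernoulli pmf with p = P(B)
```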

- 37. Binomial Distribution
  ◮ $X \in \{0, 1, \cdots, n\}$ with pmf
  $$f_X(k) = \binom{n}{k} p^k (1 - p)^{n-k}, \quad k = 0, 1, \cdots, n$$
  where n, p are parameters (n is a positive integer and $0 < p < 1$).
  ◮ This is easily seen to be a pmf:
  $$\sum_{k=0}^{n} \binom{n}{k} p^k (1 - p)^{n-k} = (p + 1 - p)^n = 1$$
  ◮ Consider n independent tosses of a coin whose probability of heads is p. If X is the number of heads then X has the above binomial distribution. (Number of successes in n Bernoulli trials.)
  ◮ Any one outcome (a sequence of length n) with k heads would have probability $p^k (1 - p)^{n-k}$. There are $\binom{n}{k}$ outcomes with exactly k heads.
  ◮ One of the df examples we considered was that of a binomial rv.

  Poisson Distribution
  ◮ $X \in \{0, 1, 2, \cdots\}$ with pmf
  $$f_X(k) = \frac{\lambda^k e^{-\lambda}}{k!}, \quad k = 0, 1, 2, \cdots$$
  where $\lambda > 0$ is a parameter.
  ◮ We can see this to be a pmf by
  $$\sum_{k=0}^{\infty} \frac{\lambda^k}{k!} e^{-\lambda} = e^{\lambda} e^{-\lambda} = 1$$
  ◮ The Poisson distribution is also useful in many applications.

  Geometric Distribution
  ◮ $X \in \{1, 2, \cdots\}$ with pmf
  $$f_X(k) = (1 - p)^{k-1} p, \quad k = 1, 2, \cdots$$
  where $0 < p < 1$ is a parameter.
  ◮ Consider tossing a coin (with probability of H being p) repeatedly till we get a head. X is the toss number on which we got the first head.
  ◮ In general, it models waiting for ‘success’ in independent Bernoulli trials.
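
The three pmfs above translate directly into code. The sketch below writes them out with the standard library and checks numerically that each sums to one; the parameter values and the truncation points of the infinite sums are arbitrary choices made for illustration.

```python
import math


def binomial_pmf(k, n, p):
    """f_X(k) = C(n, k) p^k (1 - p)^(n - k), k = 0, 1, ..., n."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    """f_X(k) = lam^k e^(-lam) / k!, k = 0, 1, 2, ..."""
    return lam**k * math.exp(-lam) / math.factorial(k)


def geometric_pmf(k, p):
    """f_X(k) = (1 - p)^(k - 1) p, k = 1, 2, ..."""
    return (1 - p) ** (k - 1) * p


# Sums over the support (infinite sums truncated where the tail is negligible).
print(sum(binomial_pmf(k, 10, 0.3) for k in range(11)))
print(sum(poisson_pmf(k, 2.5) for k in range(100)))
print(sum(geometric_pmf(k, 0.3) for k in range(1, 201)))
```

With n = 3 and p = 0.5, `binomial_pmf` reproduces the pmf of the number of heads in three fair tosses.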

- 38. Memoryless property of geometric distribution
  ◮ Suppose X is a geometric rv. Let m, n be positive integers.
  ◮ We want to calculate $P([X > m + n] \mid [X > m])$. (Remember that $[X > m]$ etc. are events.)
  ◮ Let us first calculate $P[X > n]$ for any positive integer n:
  $$P[X > n] = \sum_{k=n+1}^{\infty} P[X = k] = \sum_{k=n+1}^{\infty} (1 - p)^{k-1} p = \frac{p\,(1 - p)^n}{1 - (1 - p)} = (1 - p)^n$$
  (Does this also tell us what the df of a geometric rv is?)

  ◮ Now we can compute the required conditional probability:
  $$P[X > m + n \mid X > m] = \frac{P[X > m + n, \ X > m]}{P[X > m]} = \frac{P[X > m + n]}{P[X > m]} = \frac{(1 - p)^{m+n}}{(1 - p)^m} = (1 - p)^n$$
  $$\Rightarrow P[X > m + n \mid X > m] = P[X > n]$$
  ◮ This is known as the memoryless property of the geometric distribution.
  ◮ It is the same as $P[X > m + n] = P[X > m]\,P[X > n]$

  ◮ If X is a geometric random variable, it satisfies $P[X > m + n \mid X > m] = P[X > n]$
  ◮ This is the same as $P[X > m + n] = P[X > m]\,P[X > n]$
  ◮ Does it say that $[X > m]$ is independent of $[X > n]$?
  ◮ NO! Because $[X > m + n]$ is not equal to the intersection of $[X > m]$ and $[X > n]$.

  Memoryless property defines geometric rv
  ◮ Suppose $X \in \{0, 1, \cdots\}$ is a discrete rv satisfying, for all non-negative integers m, n,
  $$P[X > m + n] = P[X > m]\,P[X > n]$$
  ◮ We will show that X has a geometric distribution.
  ◮ First, note that
  $$P[X > 0] = P[X > 0 + 0] = (P[X > 0])^2 \Rightarrow P[X > 0] \text{ is either 1 or 0.}$$
  ◮ Let us take $P[X > 0] = 1$ (and hence $P[X = 0] = 0$).
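
The tail formula and the memoryless property can be checked with exact arithmetic. The sketch below is illustrative only: the helper names and the parameter value p = 1/3 are assumptions, and the infinite sum is truncated after a fixed number of terms.

```python
from fractions import Fraction


def tail(n, p):
    """P[X > n] for a geometric X on {1, 2, ...}: (1 - p)^n."""
    return (1 - p) ** n


def tail_by_summation(n, p, terms=200):
    """Partial sum of P[X = k] for k = n+1, ..., n+terms (approximates P[X > n])."""
    return sum((1 - p) ** (k - 1) * p for k in range(n + 1, n + terms + 1))


def conditional_tail(m, n, p):
    """P[X > m + n | X > m] = P[X > m + n] / P[X > m]."""
    return tail(m + n, p) / tail(m, p)


p = Fraction(1, 3)
print(conditional_tail(4, 3, p), tail(3, p))         # equal: memoryless property
print(tail_by_summation(5, 0.3), tail(5, 0.3))       # closed form vs. direct sum
```

Since $F_X(n) = 1 - P[X > n]$, the same tail function gives the df at integer points as `1 - tail(n, p)`.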

- 39. ◮ We have, for any m,
  $$P[X > m] = P[X > (m - 1) + 1] = P[X > m - 1]\,P[X > 1] = P[X > m - 2]\,(P[X > 1])^2$$
  ◮ Let $q = P[X > 1]$. Iterating on the above, we get
  $$P[X > m] = P[X > 0]\,(P[X > 1])^m = q^m$$
  ◮ Using this, we can get the pmf of X as
  $$P[X = m] = P[X > m - 1] - P[X > m] = q^{m-1} - q^m = q^{m-1}(1 - q)$$
  ◮ This is the pmf of a geometric rv (with $q = 1 - p$).

  Continuous Random Variables
  ◮ A rv, X, is said to be continuous (or of continuous type) if its distribution function, $F_X$, is absolutely continuous.

  Absolute Continuity
  ◮ A function $g : \Re \to \Re$ is absolutely continuous on an interval I if, given any $\epsilon > 0$, there is a $\delta > 0$ such that for any finite sequence of pair-wise disjoint subintervals $(x_k, y_k)$, with $x_k, y_k \in I, \ \forall k$, satisfying $\sum_k (y_k - x_k) < \delta$, we have $\sum_k |g(y_k) - g(x_k)| < \epsilon$
  ◮ A function that is absolutely continuous on a (finite) closed interval is uniformly continuous.
  ◮ If g is absolutely continuous on [a, b] then there exists an integrable function h such that
  $$g(x) = g(a) + \int_a^x h(t)\, dt, \quad \forall x \in [a, b]$$
  ◮ In the above, g would be differentiable almost everywhere and h would be its derivative (wherever g is differentiable).

  Continuous Random Variables
  ◮ A rv, X, is said to be continuous (or of continuous type) if its distribution function, $F_X$, is absolutely continuous.
  ◮ That is, if there exists a function $f_X : \Re \to \Re$ such that
  $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt, \quad \forall x$$
  ◮ $f_X$ is called the probability density function (pdf) of X.
  ◮ Note that $F_X$ here is continuous.
  ◮ By the fundamental theorem of calculus, we have
  $$\frac{dF_X(x)}{dx} = f_X(x), \quad \forall x \text{ where } f_X \text{ is continuous}$$

- 40. Continuous Random Variables
  ◮ If X is a continuous rv then its distribution function, $F_X$, is continuous.
  ◮ Hence a discrete random variable is not a continuous rv!
  ◮ If a rv takes countably many values then it is discrete.
  ◮ However, if a rv takes uncountably infinitely many distinct values, it does not necessarily imply that it is of continuous type.
  ◮ As mentioned earlier, there would be many random variables that are neither discrete nor continuous.
  ◮ The df of a continuous rv is continuous. This implies $F_X(x) = F_X(x^+) = F_X(x^-)$
  ◮ Hence, if X is a continuous random variable then
  $$P[X = x] = F_X(x) - F_X(x^-) = 0, \quad \forall x$$

  Probability Density Function
  ◮ The pdf of a continuous rv is defined to be the $f_X$ that satisfies
  $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt, \quad \forall x$$
  ◮ Since $F_X(\infty) = 1$, we must have $\int_{-\infty}^{\infty} f_X(t)\, dt = 1$
  ◮ For $x_1 \leq x_2$ we need $F_X(x_1) \leq F_X(x_2)$ and hence we need
  $$\int_{-\infty}^{x_1} f_X(t)\, dt \leq \int_{-\infty}^{x_2} f_X(t)\, dt \Rightarrow \int_{x_1}^{x_2} f_X(t)\, dt \geq 0, \ \forall x_1 < x_2 \Rightarrow f_X(x) \geq 0, \ \forall x$$

- 41. Properties of pdf
  ◮ The pdf, $f_X : \Re \to \Re$, of a continuous rv satisfies
  A1. $f_X(x) \geq 0, \ \forall x$
  A2. $\int_{-\infty}^{\infty} f_X(t)\, dt = 1$
  ◮ Any $f_X$ that satisfies the above two would be the probability density function of a continuous rv.
  ◮ Given $f_X$ satisfying the above two, define
  $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt, \quad \forall x$$
  This $F_X$ satisfies
  1. $F_X(-\infty) = 0; \ F_X(\infty) = 1$
  2. $F_X$ is non-decreasing.
  3. $F_X$ is continuous (and hence right continuous with left limits).
  ◮ This shows that $F_X$ is a df and hence $f_X$ is a pdf.

  Continuous rv – example
  ◮ Consider a probability space with Ω = [0, 1] and with the ‘usual’ probability assignment (where the probability of an interval is its length).
  ◮ Earlier we considered the rv $X(\omega) = \omega$ on this probability space.
  ◮ We found that the df for this is
  $$F_X(x) = \begin{cases} 0 & \text{if } x < 0 \\ x & \text{if } 0 \leq x < 1 \\ 1 & \text{if } x \geq 1 \end{cases}$$
  This is absolutely continuous and we can get the pdf as $f_X(x) = 1$ if $0 < x < 1$ ($f_X(x) = 0$ otherwise).
  ◮ On the same probability space, consider the rv $Y(\omega) = 1 - \omega$.
  ◮ Let us find $F_Y$ and $f_Y$.

  ◮ $Y(\omega) = 1 - \omega$.
  $$[Y \leq y] = \{\omega : Y(\omega) \leq y\} = \{\omega \in [0, 1] : 1 - \omega \leq y\} = \{\omega \in [0, 1] : \omega \geq 1 - y\} = \begin{cases} \phi & \text{if } y < 0 \\ [1 - y, 1] & \text{if } 0 \leq y < 1 \\ \Omega & \text{if } y \geq 1 \end{cases}$$
  ◮ Hence the df of Y is
  $$F_Y(y) = \begin{cases} 0 & \text{if } y < 0 \\ y & \text{if } 0 \leq y < 1 \\ 1 & \text{if } y \geq 1 \end{cases}$$
  ◮ We have $F_X = F_Y$ and thus $f_X = f_Y$. (However, note that $X(\omega) \neq Y(\omega)$ except at $\omega = 0.5$.)

  ◮ Let X be a continuous rv.
  ◮ It can be specified by giving either $F_X$ or the pdf, $f_X$.
  ◮ We can, in principle, compute the probability of any event as
  $$P[X \in B] = \int_B f_X(t)\, dt, \quad \forall B \in \mathcal{B}$$
  ◮ In particular, we have
  $$P[X \in [a, b]] = P[a \leq X \leq b] = \int_a^b f_X(t)\, dt = F_X(b) - F_X(a)$$
  ◮ Since the integral over the open or closed interval is the same, we have, for a continuous rv, $P[a \leq X \leq b] = P[a < X \leq b] = P[a \leq X < b]$ etc.
  ◮ Recall that for a general rv, $F_X(b) - F_X(a) = P[a < X \leq b]$

- 42. ◮ If $X$ is a continuous rv, we have
    $$P[a \le X \le b] = \int_{a}^{b} f_X(t)\, dt$$
  ◮ Thus
    $$P[x \le X \le x + \Delta x] = \int_{x}^{x + \Delta x} f_X(t)\, dt \approx f_X(x)\, \Delta x$$
  ◮ That is why $f_X$ is called the probability density function.

  ◮ For any random variable, the df is defined and it is given by
    $$F_X(x) = P[X \le x] = P[X \in (-\infty, x]\,]$$
  ◮ The value of $F_X(x)$ at any $x$ is the probability of some event.
  ◮ The pmf is defined only for discrete random variables as $f_X(x) = P[X = x]$.
  ◮ The value of the pmf is also a probability.
  ◮ We use the same symbol for the pdf (as for the pmf), defined by
    $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt$$
  ◮ Note that the value of the pdf is not a probability.
  ◮ We can say $f_X(x)\, dx \approx P[x \le X \le x + dx]$.

  A note on notation
  ◮ The df, $F_X$, and the pmf or pdf, $f_X$, are all functions defined on $\Re$.
  ◮ Hence you should not write $F_X(X \le 5)$. You should write $F_X(5)$ to denote $P[X \le 5]$.
  ◮ For a discrete rv, $X$, one should not write $f_X(X = 5)$. It is $f_X(5)$ which gives $P[X = 5]$.
  ◮ Writing $f_X(X = 5)$ when $f_X$ is a pdf is particularly bad. Note that for a continuous rv, $P[X = 5] = 0$ and, in general, $f_X(5) \ne P[X = 5]$.

  ◮ A continuous random variable is a probability model on uncountably infinite $\Omega$.
  ◮ For this, we take $\Re$ as our sample space.
  ◮ We can specify a continuous rv either through the df or through the pdf.
  ◮ The df, $F_X$, of a continuous rv allows you to (consistently) assign probabilities to all Borel subsets of the real line.
  ◮ We next consider a few standard continuous random variables.
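
A small numerical sketch of the approximation $f_X(x)\,\Delta x \approx P[x \le X \le x + \Delta x]$ may help here. The density $f(x) = 2x$ on $[0, 1]$, with df $F(x) = x^2$, is an illustrative assumption chosen because everything can be checked by hand; it does not come from the slides.

```python
def pdf(x):
    """Illustrative density f(x) = 2x on [0, 1], zero elsewhere."""
    return 2.0 * x if 0.0 <= x <= 1.0 else 0.0

def df(x):
    """Matching distribution function F(x) = x^2 on [0, 1]."""
    if x < 0.0:
        return 0.0
    if x < 1.0:
        return x * x
    return 1.0

def interval_prob(x, dx):
    """Exact P[x <= X <= x + dx] = F(x + dx) - F(x)."""
    return df(x + dx) - df(x)

x = 0.5
for dx in (0.1, 0.01, 0.001):
    # The gap between the two numbers shrinks like dx**2.
    print(dx, interval_prob(x, dx), pdf(x) * dx)

# A pdf value is a density, not a probability: here it exceeds 1.
print(pdf(0.9))
```

The last line is the point of the note on notation: `pdf(0.9)` is larger than 1, so it cannot be read as $P[X = 0.9]$.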

- 43. Uniform distribution
  ◮ $X$ is uniform over $[a, b]$ when its pdf is
    $$f_X(x) = \frac{1}{b - a}, \quad a \le x \le b$$
    ($f_X(x) = 0$ for all other values of $x$).
  ◮ The uniform distribution over the open or the closed interval is essentially the same.
  ◮ When $X$ has this distribution, we say $X \sim U[a, b]$.
  ◮ By integrating the above, we get the df as
    $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt = \begin{cases} \int_{-\infty}^{x} 0\, dt = 0 & \text{if } x < a \\ \int_{-\infty}^{a} 0\, dt + \int_{a}^{x} \frac{1}{b - a}\, dt = \frac{x - a}{b - a} & \text{if } a \le x < b \\ 0 + \int_{a}^{b} \frac{1}{b - a}\, dt + 0 = 1 & \text{if } x \ge b \end{cases}$$

  ◮ [Plot: density and distribution functions of a uniform rv]

  ◮ Let $X \sim U[a, b]$. Then $f_X(x) = \frac{1}{b - a}, \ a \le x \le b$.
  ◮ Let $[c, d] \subset [a, b]$.
  ◮ Then $P[X \in [c, d]] = \int_{c}^{d} f_X(t)\, dt = \frac{d - c}{b - a}$.
  ◮ The probability of an interval is proportional to its length.
  ◮ The earlier examples we considered are uniform over $[0, 1]$.

  Exponential distribution
  ◮ The pdf of the exponential distribution is
    $$f_X(x) = \lambda e^{-\lambda x}, \quad x > 0 \quad (\lambda > 0 \text{ is a parameter})$$
    (By our notation, $f_X(x) = 0$ for $x \le 0$.)
  ◮ It is easy to verify that $\int_{0}^{\infty} f_X(x)\, dx = 1$.
  ◮ It is easy to see that $F_X(x) = 0$ for $x \le 0$.
  ◮ For $x > 0$ we can compute $F_X$ by integrating $f_X$:
    $$F_X(x) = \int_{0}^{x} \lambda e^{-\lambda t}\, dt = \lambda \left[ \frac{e^{-\lambda t}}{-\lambda} \right]_{0}^{x} = 1 - e^{-\lambda x}$$
  ◮ This also gives us $P[X > x] = 1 - F_X(x) = e^{-\lambda x}$ for $x > 0$.
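
The two distribution functions derived above can be written down directly. The sketch below uses hypothetical function names and arbitrary parameter values; it checks that $P[X \in [c, d]] = \frac{d - c}{b - a}$ for the uniform case and that $P[X > x] = e^{-\lambda x}$ for the exponential case.

```python
import math

def uniform_df(x, a, b):
    """df of U[a, b]: 0 below a, (x - a)/(b - a) on [a, b), 1 from b on."""
    if x < a:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    return 1.0

def exponential_df(x, lam):
    """df of the exponential distribution: 1 - exp(-lam * x) for x > 0."""
    return 1.0 - math.exp(-lam * x) if x > 0 else 0.0

# Uniform: probability of [c, d] inside [a, b] is proportional to its length.
a, b, c, d = 2.0, 6.0, 3.0, 4.0
p_interval = uniform_df(d, a, b) - uniform_df(c, a, b)
print(p_interval, (d - c) / (b - a))

# Exponential: the tail probability P[X > x] equals exp(-lam * x).
lam, x = 2.0, 1.0
print(1.0 - exponential_df(x, lam), math.exp(-lam * x))
```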

- 44. ◮ [Plot: density and distribution functions of an exponential rv]

  Exponential distribution is memoryless
  ◮ If $X$ has the exponential distribution, then, for $t, s > 0$,
    $$P[X > t + s] = e^{-\lambda (t + s)} = e^{-\lambda t}\, e^{-\lambda s} = P[X > t]\, P[X > s]$$
  ◮ This gives us the memoryless property
    $$P[X > t + s \mid X > t] = \frac{P[X > t + s]}{P[X > t]} = P[X > s]$$
  ◮ The exponential distribution is a useful model for, e.g., the life-time of components.
  ◮ If the distribution of a non-negative continuous random variable is memoryless then it must be exponential.

  Gaussian Distribution
  ◮ The pdf of the Gaussian distribution is given by
    $$f_X(x) = \frac{1}{\sigma \sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}, \quad -\infty < x < \infty$$
    where $\sigma > 0$ and $\mu \in \Re$ are parameters.
  ◮ We write $X \sim N(\mu, \sigma^2)$ to denote that $X$ has Gaussian density with parameters $\mu$ and $\sigma$.
  ◮ This is also called the Normal distribution.
  ◮ The special case where $\mu = 0$ and $\sigma^2 = 1$ is called the standard Gaussian (or standard Normal) distribution.

  ◮ [Plot: Gaussian density functions]
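
The memoryless property can be checked numerically from the tail formula $P[X > x] = e^{-\lambda x}$. This is only a sketch: the function names and the values of $t$, $s$ and $\lambda$ are arbitrary choices made for illustration.

```python
import math

def tail(x, lam):
    """P[X > x] for an exponential rv with parameter lam, valid for x > 0."""
    return math.exp(-lam * x)

def conditional_tail(t, s, lam):
    """P[X > t + s | X > t] = P[X > t + s] / P[X > t]."""
    return tail(t + s, lam) / tail(t, lam)

t, s, lam = 3.0, 1.5, 0.7
# The conditional tail should not depend on t: it equals P[X > s].
print(conditional_tail(t, s, lam), tail(s, lam))
```

In life-time terms, a component that has already survived for time $t$ has the same chance of surviving a further time $s$ as a new one.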

- 45. ◮ $f_X(x) = \frac{1}{\sigma \sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}, \quad -\infty < x < \infty$
  ◮ Showing that the density integrates to 1 is not trivial.
  ◮ Take $\mu = 0$, $\sigma = 1$. Let $I = \int_{-\infty}^{\infty} f_X(x)\, dx$. Then
    $$I^2 = \left( \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}}\, e^{-0.5 x^2}\, dx \right) \left( \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}}\, e^{-0.5 y^2}\, dy \right) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} \frac{1}{2\pi}\, e^{-0.5 (x^2 + y^2)}\, dx\, dy$$
  ◮ Converting the above integral into polar coordinates allows you to show $I = 1$. (Left as an exercise for you!)

  Recap: Random Variable
  ◮ Given a probability space $(\Omega, \mathcal{F}, P)$, a random variable is a real-valued function on $\Omega$.
  ◮ It essentially results in an induced probability space
    $$(\Omega, \mathcal{F}, P) \xrightarrow{\ X\ } (\Re, \mathcal{B}, P_X)$$
    where $\mathcal{B}$ is the Borel $\sigma$-algebra and
    $$P_X(B) = P[X \in B] = P(\{\omega \in \Omega : X(\omega) \in B\})$$
  ◮ For $X$ to be a random variable, we need
    $$\{\omega \in \Omega : X(\omega) \in B\} \in \mathcal{F}, \quad \forall B \in \mathcal{B}$$

  Recap: Distribution Function
  ◮ Let $X$ be a random variable. Its distribution function, $F_X : \Re \to \Re$, is defined by
    $$F_X(x) = P[X \le x] = P(\{\omega \in \Omega : X(\omega) \le x\})$$
  ◮ The distribution function, $F_X$, completely specifies the probability measure, $P_X$.
  ◮ The distribution function satisfies
    1. $0 \le F_X(x) \le 1, \ \forall x$
    2. $F_X(-\infty) = 0$; $F_X(\infty) = 1$
    3. $F_X$ is non-decreasing: $x_1 \le x_2 \Rightarrow F_X(x_1) \le F_X(x_2)$
    4. $F_X$ is right continuous and has left-hand limits.
  ◮ We also have
    $$F_X(x^+) - F_X(x^-) = F_X(x) - F_X(x^-) = P[X = x]$$
    $$P[a < X \le b] = F_X(b) - F_X(a)$$

  Recap: Discrete Random Variable
  ◮ A random variable $X$ is said to be discrete if it takes only finitely many or countably infinitely many distinct values.
  ◮ Let $X \in \{x_1, x_2, \cdots\}$.
  ◮ Its distribution function, $F_X$, is a stair-case function with jump discontinuities at each $x_i$, and the magnitude of the jump at $x_i$ is equal to $P[X = x_i]$.
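
The polar-coordinates argument is left as an exercise above. As a sanity check that does not replace that proof, the sketch below integrates the Gaussian density numerically with a simple midpoint rule. The integration limits, the step count and the function names are assumptions made for illustration.

```python
import math

def gaussian_pdf(x, mu=0.0, sigma=1.0):
    """Gaussian density with mean parameter mu and scale parameter sigma > 0."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def integrate(f, lo, hi, n=100_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))

# Standard Gaussian: the mass outside [-10, 10] is negligible.
total_standard = integrate(gaussian_pdf, -10.0, 10.0)

# A non-standard case, N(1, 4), integrated over a correspondingly wider range.
total_shifted = integrate(lambda x: gaussian_pdf(x, mu=1.0, sigma=2.0), -30.0, 30.0)

print(total_standard, total_shifted)  # both should be very close to 1
```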

- 46. Recap: probability mass function
  ◮ Let $X \in \{x_1, x_2, \cdots\}$.
  ◮ The probability mass function (pmf) of $X$ is defined by
    $$f_X(x_i) = P[X = x_i]; \quad f_X(x) = 0 \text{ for all other } x$$
  ◮ It satisfies
    1. $f_X(x) \ge 0, \ \forall x$, and $f_X(x) = 0$ if $x \ne x_i$ for all $i$
    2. $\sum_{i} f_X(x_i) = 1$
  ◮ We have
    $$F_X(x) = \sum_{i:\, x_i \le x} f_X(x_i), \qquad f_X(x) = F_X(x) - F_X(x^-)$$
  ◮ We can calculate the probability of any event as
    $$P[X \in B] = \sum_{i:\, x_i \in B} f_X(x_i)$$

  Recap: continuous random variable
  ◮ $X$ is said to be a continuous random variable if there exists a function $f_X : \Re \to \Re$ satisfying
    $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt$$
    This $f_X$ is called the probability density function.
  ◮ This is the same as saying $F_X$ is absolutely continuous.
  ◮ Since $F_X$ is continuous here, we have
    $$P[X = x] = F_X(x) - F_X(x^-) = 0, \quad \forall x$$
  ◮ A continuous rv takes uncountably many distinct values. However, not every rv that takes uncountably many values is a continuous rv.

  Recap: probability density function
  ◮ The pdf of a continuous rv is defined to be the $f_X$ that satisfies
    $$F_X(x) = \int_{-\infty}^{x} f_X(t)\, dt, \quad \forall x$$
  ◮ It satisfies
    1. $f_X(x) \ge 0, \ \forall x$
    2. $\int_{-\infty}^{\infty} f_X(t)\, dt = 1$
  ◮ We can, in principle, compute the probability of any event as
    $$P[X \in B] = \int_{B} f_X(t)\, dt, \quad \forall B \in \mathcal{B}$$
  ◮ In particular,
    $$P[a \le X \le b] = \int_{a}^{b} f_X(t)\, dt$$

  Recap: some discrete random variables
  ◮ Bernoulli: $X \in \{0, 1\}$; parameter: $p$, $0 < p < 1$.
    $$f_X(1) = p; \quad f_X(0) = 1 - p$$
  ◮ Binomial: $X \in \{0, 1, \cdots, n\}$; parameters: $n, p$.
    $$f_X(x) = {}^{n}C_{x}\, p^x (1 - p)^{n - x}, \quad x = 0, \cdots, n$$
  ◮ Poisson: $X \in \{0, 1, \cdots\}$; parameter: $\lambda > 0$.
    $$f_X(x) = e^{-\lambda}\, \frac{\lambda^x}{x!}, \quad x = 0, 1, \cdots$$
  ◮ Geometric: $X \in \{1, 2, \cdots\}$; parameter: $p$, $0 < p < 1$.
    $$f_X(x) = p (1 - p)^{x - 1}, \quad x = 1, 2, \cdots$$
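
The pmfs listed in the recap can be coded directly from their formulas. The sketch below checks the property $\sum_i f_X(x_i) = 1$ for each; the parameter values and truncation points for the infinite sums are arbitrary illustrative choices.

```python
import math

def binomial_pmf(x, n, p):
    """nCx * p^x * (1 - p)^(n - x) for x = 0, ..., n."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf(x, lam):
    """exp(-lam) * lam^x / x! for x = 0, 1, ..."""
    return math.exp(-lam) * lam**x / math.factorial(x)

def geometric_pmf(x, p):
    """p * (1 - p)^(x - 1) for x = 1, 2, ..."""
    return p * (1 - p) ** (x - 1)

binomial_total = sum(binomial_pmf(x, 10, 0.3) for x in range(0, 11))
# The infinite sums are truncated where the remaining mass is negligible.
poisson_total = sum(poisson_pmf(x, 4.0) for x in range(0, 100))
geometric_total = sum(geometric_pmf(x, 0.2) for x in range(1, 201))

print(binomial_total, poisson_total, geometric_total)  # each close to 1
```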

- 47. Recap: Some continuous random variables
  ◮ Uniform over $[a, b]$: parameters: $a, b$, with $b > a$.
    $$f_X(x) = \frac{1}{b - a}, \quad a \le x \le b$$
  ◮ Exponential: parameter: $\lambda > 0$.
    $$f_X(x) = \lambda e^{-\lambda x}, \quad x \ge 0$$
  ◮ Gaussian (Normal): parameters: $\sigma > 0$, $\mu$.
    $$f_X(x) = \frac{1}{\sigma \sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}, \quad -\infty < x < \infty$$

  Functions of a random variable
  ◮ We next look at random variables defined in terms of other random variables.

  ◮ Let $X$ be a rv on some probability space $(\Omega, \mathcal{F}, P)$.
  ◮ Recall that $X : \Omega \to \Re$.
  ◮ Also recall that
    $$[X \in B] \triangleq \{\omega : X(\omega) \in B\} \in \mathcal{F}, \quad \forall B \in \mathcal{B}$$

  Functions of a Random Variable
  ◮ Let $X$ be a rv on some probability space $(\Omega, \mathcal{F}, P)$. (Recall $X : \Omega \to \Re$.)
  ◮ Consider a function $g : \Re \to \Re$.
  ◮ Let $Y = g(X)$. Then $Y$ also maps $\Omega$ into the real line.
  ◮ If $g$ is a ‘nice’ function, $Y$ would also be a random variable.
  ◮ We need:
    $$g^{-1}(B) \triangleq \{z \in \Re : g(z) \in B\} \in \mathcal{B}, \quad \forall B \in \mathcal{B}$$

- 48. ◮ Let $X$ be a rv and let $Y = g(X)$.
  ◮ The distribution function of $Y$ is given by
    $$F_Y(y) = P[Y \le y] = P[g(X) \le y] = P[g(X) \in (-\infty, y]\,] = P[X \in \{z : g(z) \le y\}]$$
  ◮ This probability can be obtained from the distribution of $X$.
  ◮ Thus, in principle, we can find the distribution of $Y$ if we know that of $X$.

  Example
  ◮ Let $Y = aX + b$, $a > 0$.
  ◮ Then we have
    $$F_Y(y) = P[Y \le y] = P[aX + b \le y] = P[aX \le y - b] = P\left[ X \le \frac{y - b}{a} \right] \ (\text{since } a > 0) = F_X\left( \frac{y - b}{a} \right)$$
  ◮ This tells us how to find the df of $Y$ when it is an affine function of $X$.
  ◮ If $X$ is a continuous rv, then $f_Y(y) = \frac{1}{a}\, f_X\left( \frac{y - b}{a} \right)$.

  ◮ In many examples we would be using uniform random variables.
  ◮ Let $X \sim U[0, 1]$. Its pdf is $f_X(x) = 1, \ 0 \le x \le 1$.
  ◮ Integrating this we get the df: $F_X(x) = x, \ 0 \le x \le 1$.

  ◮ Let $X \sim U[-1, 1]$. The pdf would be $f_X(x) = 0.5, \ -1 \le x \le 1$.
  ◮ Integrating this, we get the df: $F_X(x) = \frac{1 + x}{2}$ for $-1 \le x \le 1$.
  ◮ [Plot of this pdf and df]
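
The affine rule above, $F_Y(y) = F_X\left(\frac{y - b}{a}\right)$ and $f_Y(y) = \frac{1}{a} f_X\left(\frac{y - b}{a}\right)$ for $a > 0$, can be sketched as two small helper functions. The helper names and the choice $a = 3$, $b = 2$ with $X \sim U[0, 1]$ are illustrative assumptions.

```python
def affine_df(F_X, a, b):
    """Return F_Y for Y = a*X + b, given the df F_X of X. Assumes a > 0."""
    if a <= 0:
        raise ValueError("this formula assumes a > 0")
    return lambda y: F_X((y - b) / a)

def affine_pdf(f_X, a, b):
    """Return f_Y for Y = a*X + b, given the pdf f_X of X. Assumes a > 0."""
    if a <= 0:
        raise ValueError("this formula assumes a > 0")
    return lambda y: f_X((y - b) / a) / a

def uniform01_df(x):
    """df of U[0, 1]: x clipped to the interval [0, 1]."""
    return min(max(x, 0.0), 1.0)

def uniform01_pdf(x):
    """pdf of U[0, 1]."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0

# With a = 3 and b = 2, Y = 3X + 2 takes values in [2, 5].
F_Y = affine_df(uniform01_df, a=3.0, b=2.0)
f_Y = affine_pdf(uniform01_pdf, a=3.0, b=2.0)

print(F_Y(3.5))  # midpoint of [2, 5]
print(f_Y(3.5))  # constant density 1/a on [2, 5]
print(f_Y(6.0))  # outside [2, 5]
```

Passing the df or pdf in as a function keeps the rule separate from any particular distribution, so the same helpers apply to a Gaussian or exponential $X$.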

- 49. ◮ Suppose $X \sim U[0, 1]$ and $Y = aX + b$.
  ◮ The df for $Y$ would be
    $$F_Y(y) = F_X\left( \frac{y - b}{a} \right) = \begin{cases} 0 & \frac{y - b}{a} \le 0 \\ \frac{y - b}{a} & 0 \le \frac{y - b}{a} \le 1 \\ 1 & \frac{y - b}{a} \ge 1 \end{cases}$$
  ◮ Thus we get the df for $Y$ as
    $$F_Y(y) = \begin{cases} 0 & y \le b \\ \frac{y - b}{a} & b \le y \le a + b \\ 1 & y \ge a + b \end{cases}$$
  ◮ Hence $f_Y(y) = \frac{1}{a}, \ y \in [b, a + b]$, and $Y \sim U[b, a + b]$.
  ◮ If $X \sim U[0, 1]$ then $Y = aX + b$, $(a > 0)$, is uniform over $[b, a + b]$.

  ◮ Recall that the Gaussian density is
    $$f(x) = \frac{1}{\sigma \sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}$$
  ◮ We denote this as $N(\mu, \sigma^2)$.
  ◮ Let $Y = aX + b$ $(a > 0)$ where $X \sim N(0, 1)$. The df of $Y$ is
    $$F_Y(y) = F_X\left( \frac{y - b}{a} \right) = \int_{-\infty}^{\frac{y - b}{a}} \frac{1}{\sqrt{2\pi}}\, e^{-\frac{x^2}{2}}\, dx$$
    We make the substitution $t = ax + b \Rightarrow x = \frac{t - b}{a}$ and $dx = \frac{1}{a}\, dt$:
    $$F_Y(y) = \int_{-\infty}^{y} \frac{1}{a \sqrt{2\pi}}\, e^{-\frac{(t - b)^2}{2a^2}}\, dt$$
  ◮ This shows that $Y \sim N(b, a^2)$.

  ◮ Suppose $X$ is a discrete rv with $X \in \{x_1, x_2, \cdots\}$.
  ◮ Suppose $Y = g(X)$.
  ◮ Then $Y$ is also discrete and $Y \in \{g(x_1), g(x_2), \cdots\}$.
  ◮ Though we use this notation, we should note:
    1. these values may not be distinct (it is possible that $g(x_i) = g(x_j)$);
    2. $g(x_1)$ may not be the smallest value of $Y$, and so on.
  ◮ We can find the pmf of $Y$ as
    $$f_Y(y) = P[Y = y] = P[g(X) = y] = P[X \in \{x_i : g(x_i) = y\}] = \sum_{i:\, g(x_i) = y} f_X(x_i)$$

  ◮ Let $X \in \{1, 2, \cdots, N\}$ with $f_X(k) = \frac{1}{N}, \ 1 \le k \le N$.
  ◮ Let $Y = aX + b$, $(a > 0)$.
  ◮ Then $Y \in \{b + a, b + 2a, \cdots, b + Na\}$.
  ◮ We get the pmf of $Y$ as
    $$f_Y(b + ka) = f_X(k) = \frac{1}{N}, \quad 1 \le k \le N$$
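
The discrete rule $f_Y(y) = \sum_{i:\, g(x_i) = y} f_X(x_i)$ amounts to grouping the probabilities of all $x_i$ that map to the same value. A minimal sketch follows; the function name `pmf_of_function` and the example ($X$ uniform on $\{-2, \ldots, 2\}$ with $g(x) = x^2$) are assumptions chosen so that $g$ is not one-to-one.

```python
from collections import defaultdict
from fractions import Fraction

def pmf_of_function(pmf_x, g):
    """pmf of Y = g(X): add up f_X(x) over all x with the same g(x)."""
    pmf_y = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for x, prob in pmf_x.items():
        pmf_y[g(x)] += prob
    return dict(pmf_y)

# X uniform on {-2, -1, 0, 1, 2}; exact fractions avoid rounding noise.
pmf_x = {k: Fraction(1, 5) for k in range(-2, 3)}

# g(x) = x^2 sends -2 and 2 to 4, and -1 and 1 to 1.
pmf_y = pmf_of_function(pmf_x, lambda x: x * x)

# P[Y = 0] = 1/5, P[Y = 1] = 2/5, P[Y = 4] = 2/5
for y in sorted(pmf_y):
    print(y, pmf_y[y])
```

When $g$ is one-to-one, as in $Y = aX + b$ with $a > 0$, each group has a single element and the rule reduces to $f_Y(b + ka) = f_X(k)$.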

- 50. ◮ Suppose $X$ is geometric: $f_X(k) = (1 - p)^{k - 1} p, \ k = 1, 2, \cdots$.
  ◮ Let $Y = X - 1$.
  ◮ We get the pmf of $Y$ as
    $$f_Y(j) = P[X - 1 = j] = P[X = j + 1] = (1 - p)^{j} p, \quad j = 0, 1, \cdots$$

  ◮ Suppose $X$ is geometric. ($f_X(k) = (1 - p)^{k - 1} p$)
  ◮ Let $Y = \max(X, 5) \Rightarrow Y \in \{5, 6, \cdots\}$.
  ◮ We can calculate the pmf of $Y$ as
    $$f_Y(5) = P[\max(X, 5) = 5] = \sum_{k=1}^{5} f_X(k) = 1 - (1 - p)^5$$
    $$f_Y(k) = P[\max(X, 5) = k] = P[X = k] = (1 - p)^{k - 1} p, \quad k = 6, 7, \cdots$$

  ◮ We next consider $Y = h(X)$ where
    $$h(x) = \begin{cases} x & \text{if } x > 0 \\ 0 & \text{otherwise} \end{cases}$$
  ◮ This is written as $Y = X^+$ to indicate that the function keeps only the positive part.

  ◮ Let $X \sim U[-1, 1]$: $F_X(x) = \frac{1 + x}{2}$ for $-1 \le x \le 1$.
  ◮ Let $Y = X^+$. That is,
    $$Y = X^+ = \begin{cases} X & \text{if } X > 0 \\ 0 & \text{otherwise} \end{cases}$$
  ◮ For $y < 0$, $F_Y(y) = P[Y \le y] = 0$ because $Y \ge 0$.
  ◮ $F_Y(0) = P[Y \le 0] = P[X \le 0] = 0.5$.
  ◮ For $0 < y < 1$, $F_Y(y) = P[Y \le y] = P[X \le y] = \frac{1 + y}{2}$.
  ◮ For $y \ge 1$, $F_Y(y) = 1$.
  ◮ Thus, the df of $Y$ is
    $$F_Y(y) = \begin{cases} 0 & \text{if } y < 0 \\ 0.5 & \text{if } y = 0 \\ \frac{1 + y}{2} & \text{if } 0 < y < 1 \\ 1 & \text{if } y \ge 1 \end{cases}$$
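
The pmf of $Y = \max(X, 5)$ for geometric $X$ can be checked against the closed form $f_Y(5) = 1 - (1 - p)^5$. The sketch below uses the hypothetical name `pmf_max_with_5` and an arbitrary value $p = 0.3$.

```python
def geometric_pmf(k, p):
    """f_X(k) = (1 - p)^(k - 1) * p for k = 1, 2, ..."""
    return (1 - p) ** (k - 1) * p

def pmf_max_with_5(k, p):
    """pmf of Y = max(X, 5) for geometric X, at an integer k."""
    if k < 5:
        return 0.0  # Y never takes values below 5
    if k == 5:
        # All outcomes X = 1, ..., 5 are mapped to Y = 5.
        return sum(geometric_pmf(j, p) for j in range(1, 6))
    return geometric_pmf(k, p)  # for k >= 6, Y = k exactly when X = k

p = 0.3
print(pmf_max_with_5(5, p), 1 - (1 - p) ** 5)  # the two should agree

# Truncated total; the mass beyond k = 299 is negligible.
print(sum(pmf_max_with_5(k, p) for k in range(5, 300)))
```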

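- 51. ◮ The df of $Y$ is
    $$F_Y(y) = \begin{cases} 0 & \text{if } y < 0 \\ \frac{1 + y}{2} & \text{if } 0 \le y < 1 \\ 1 & \text{if } y \ge 1 \end{cases}$$
  ◮ [Plot of $F_Y$]
  ◮ This is neither a continuous rv nor a discrete rv.

  ◮ Let $Y = X^2$.
  ◮ For $y < 0$, $F_Y(y) = P[Y \le y] = 0$ (since $Y \ge 0$).
  ◮ For $y \ge 0$, we can get $F_Y(y)$ as
    $$F_Y(y) = P[Y \le y] = P[X^2 \le y] = P[-\sqrt{y} \le X \le \sqrt{y}] = F_X(\sqrt{y}) - F_X(-\sqrt{y}) + P[X = -\sqrt{y}]$$
  ◮ If $X$ is a continuous random variable, then $P[X = -\sqrt{y}] = 0$ and, for $y > 0$, we get
    $$f_Y(y) = \frac{d}{dy} \left( F_X(\sqrt{y}) - F_X(-\sqrt{y}) \right) = \frac{1}{2\sqrt{y}} \left[ f_X(\sqrt{y}) + f_X(-\sqrt{y}) \right]$$
  ◮ This is the general formula for the density of $X^2$ when $X$ is a continuous rv.

  ◮ Let $X \sim N(0, 1)$: $f_X(x) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{x^2}{2}}$.
  ◮ Let $Y = X^2$. Then we know $f_Y(y) = 0$ for $y < 0$. For $y > 0$,
    $$f_Y(y) = \frac{1}{2\sqrt{y}} \left[ f_X(\sqrt{y}) + f_X(-\sqrt{y}) \right] = \frac{1}{2\sqrt{y}} \left[ \frac{1}{\sqrt{2\pi}}\, e^{-\frac{y}{2}} + \frac{1}{\sqrt{2\pi}}\, e^{-\frac{y}{2}} \right] = \frac{1}{2\sqrt{y}}\, \frac{2}{\sqrt{2\pi}}\, e^{-\frac{y}{2}} = \frac{1}{\sqrt{\pi}} \left( \frac{1}{2} \right)^{0.5} y^{-0.5}\, e^{-\frac{1}{2} y}$$
  ◮ This is an example of a gamma density.

  Gamma density
  ◮ The Gamma function is given by
    $$\Gamma(\alpha) = \int_{0}^{\infty} x^{\alpha - 1} e^{-x}\, dx$$
    It can be easily verified that $\Gamma(\alpha + 1) = \alpha\, \Gamma(\alpha)$.
  ◮ The Gamma density is given by
    $$f(x) = \frac{1}{\Gamma(\alpha)}\, \lambda^{\alpha} x^{\alpha - 1} e^{-\lambda x} = \frac{1}{\Gamma(\alpha)}\, (\lambda x)^{\alpha - 1}\, \lambda e^{-\lambda x}, \quad x > 0$$
  ◮ Here $\alpha, \lambda > 0$ are parameters.
  ◮ The earlier density we saw corresponds to $\alpha = \lambda = 0.5$ (using $\Gamma(0.5) = \sqrt{\pi}$):
    $$f_Y(y) = \frac{1}{\sqrt{\pi}} \left( \frac{1}{2} \right)^{0.5} y^{-0.5}\, e^{-\frac{1}{2} y}, \quad y > 0$$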
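
The claim that the density of $X^2$ for $X \sim N(0, 1)$ is the gamma density with $\alpha = \lambda = 0.5$ can be checked numerically. The sketch below evaluates both formulas at a few points; the function names are hypothetical, and setting the density to 0 at $y = 0$ is a convention adopted here because the formula is unbounded there.

```python
import math

def std_normal_pdf(x):
    """Density of N(0, 1)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def pdf_of_square(f_X, y):
    """Density of Y = X^2 for continuous X: [f_X(r) + f_X(-r)] / (2r), r = sqrt(y)."""
    if y <= 0:
        return 0.0  # convention at y = 0; the formula itself blows up there
    r = math.sqrt(y)
    return (f_X(r) + f_X(-r)) / (2.0 * r)

def gamma_pdf(x, alpha, lam):
    """Gamma density: lam^alpha * x^(alpha - 1) * exp(-lam * x) / Gamma(alpha), x > 0."""
    if x <= 0:
        return 0.0
    return lam**alpha * x ** (alpha - 1) * math.exp(-lam * x) / math.gamma(alpha)

for y in (0.1, 1.0, 2.5):
    # The two columns should agree up to floating-point rounding.
    print(y, pdf_of_square(std_normal_pdf, y), gamma_pdf(y, 0.5, 0.5))

# Spot checks of Gamma(0.5) = sqrt(pi) and Gamma(alpha + 1) = alpha * Gamma(alpha).
print(math.gamma(0.5), math.sqrt(math.pi))
print(math.gamma(3.5), 2.5 * math.gamma(2.5))
```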